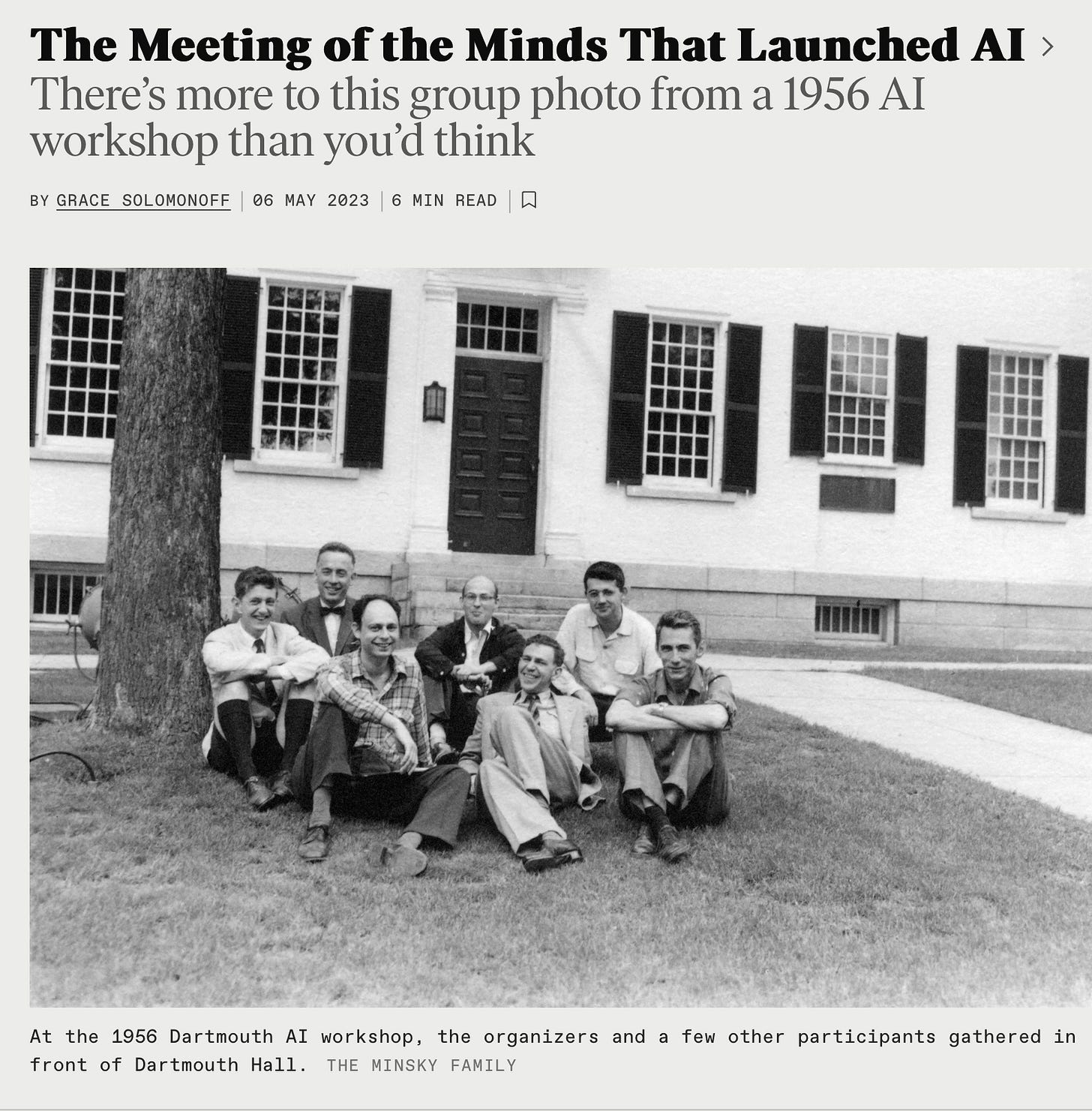

John McCarthy coined the term “artificial intelligence” in the 1955 proposal for the 1956 Dartmouth Conference, the kickoff conference for the field of AI. McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon wrote the proposal, and Allen Newell and Herbert A. Simon were among the attendees. The founders proceeded on the conjecture that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

McCarthy's term "artificial intelligence," Minsky's work on neural nets, Rochester's understanding of computer design, and Shannon's theories on information and communication brought together shared interests in mechanical brains. Among the small number of other researchers was the seventh person in the photo, Pete Milner, who couldn’t understand why he’d been invited to a conference on artificial intelligence (Pete is the guy sprawled out, I think, who went unidentified for decades). Here is why he was invited:

“…one of Milner’s most famous papers, “The Cell Assembly: Mark II,” in 1957. The paper describes how, when a neuron in the brain fires, it excites similar connected neurons (especially those already aroused by sensory input) and randomly excites other cortical neurons. Cells may form assemblies and connect with other assemblies. But the neurons don’t seem to exhibit the…snowballing behavior of atoms that leads to an exponential explosion. How neurons might inhibit this effect were among his ideas that led to new insights at the workshop.”

McCarthy's choice of the word "artificial" was a moment of magic, intentionally meant to distinguish machine intelligence from biological human and animal intelligence. Machine intelligence was neither organic nor natural, the researchers wanted to emphasize; no one was claiming to grow brains in petri dishes. AI would be manufactured by programming and hardware. There was no hurry to trespass into psychology, cognitive science, and other studies of natural intelligence—not yet. They tried to build a wall between natural intelligence and machine intelligence. Consider the following quote:

“Intelligence has two parts, which we shall call the epistemological and the heuristic. The epistemological part is the representation of the world in such a form that the solution of problems follows from the facts expressed in the representation. The heuristic part is the mechanism that on the basis of the information solves the problem and decides what to do.”

– John McCarthy (1969)

Epistemology, the capacity to create knowledge of the world, involves the expression of “facts…in [a] representation” of experience in the real world using symbol systems. A “heuristic” is a practical approach to solving problems or making decisions, not guaranteed to be perfect, optimal, or rational, but sufficient for reaching an immediate, short-term goal. Heuristics speed up the process of finding a satisfactory solution by substituting quicker, more easily implemented methods for more formal and comprehensive ones. Based on experience, guesswork, and rules of thumb, heuristics get us to a "good enough" solution where perfection is either impossible or impractical: close but with a cigar. For McCarthy, to have epistemic potential is to be human. Artificial intelligence is a heuristic tool.

In 1979 McCarthy wrote an article entitled “Ascribing Mental Qualities to Machines.” In it he wrote, “Machines as simple as thermostats can be said to have beliefs, and having beliefs seems to be a characteristic of most machines capable of problem-solving performance.” In this paper McCarthy discussed the significance of having beliefs about the world as a prerequisite for problem-solving or decision-making. He observed that while humans have beliefs, machines do not, but we can say that machines have beliefs as a heuristic, a linguistic trick to help us talk and think about the machine. Beliefs and heuristics are reciprocally related: beliefs permit humans to act on incomplete information, heuristics help us focus on the information available to us, and heuristics that work well can become part of a belief. Because humans don’t know everything, we rely on our beliefs where we have no knowledge; because machines are designed to do things humans do even in the absence of epistemology, we must talk about them as if they are human. So we must ascribe belief where there is no human capacity for it:

“[The] reasons for ascribing beliefs [to machines] are epistemological; …ascribing beliefs is needed to adapt [machines] to limitations on our ability to acquire knowledge, use it for prediction, and establish generalisations in terms of the elementary structure of the program. Perhaps this is the general reason for ascribing higher levels of organisation [like beliefs] to systems” (McCarthy, 1979, p.4).
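To make the thermostat case concrete for myself, I wrote a minimal sketch in Python. It is only an illustration under my own assumptions: the class name, the setpoint and tolerance values, and the wording of the three belief labels are my shorthand, not McCarthy's formalism. The point it tries to show is that the "belief" lives in our description of the device, while the device itself only compares numbers.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """A toy thermostat; all names and values here are hypothetical."""

    setpoint: float          # target temperature (assumed degrees Celsius)
    tolerance: float = 1.0   # dead band around the setpoint (assumed value)

    def ascribed_belief(self, reading: float) -> str:
        # The device only compares numbers. The sentences returned below
        # are what an observer ascribes to it, as a way of talking about it.
        if reading < self.setpoint - self.tolerance:
            return "the room is too cold"
        if reading > self.setpoint + self.tolerance:
            return "the room is too hot"
        return "the room is OK"

    def action(self, reading: float) -> str:
        # Predicting the behavior from the ascribed belief is the
        # heuristic payoff: we never need to inspect the circuitry.
        responses = {
            "the room is too cold": "heat on",
            "the room is too hot": "heat off",
            "the room is OK": "no change",
        }
        return responses[self.ascribed_belief(reading)]


thermostat = Thermostat(setpoint=20.0)
print(thermostat.ascribed_belief(17.0), "->", thermostat.action(17.0))
```

Nothing in the sketch knows anything about rooms or warmth. Ascribing the belief simply lets us predict what the machine will do next.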

In this paper McCarthy discussed the role of facts and counterfactuals in epistemological work and in problem-solving. Against the backdrop of natural beliefs vs. programmed beliefs, he used this hypothetical:

“Suppose a ski instructor says, ‘He wouldn't have fallen if he had bent his knees when he made that turn,’ and another instructor replies, ‘No, the reason he fell was that he didn't put his weight on his downhill ski.’ Suppose further that on reviewing a film, they agree that the first instructor was correct and the second mistaken” (p.7).

Here we have one fact and one counterfactual, that is, one correct observation and one incorrect observation stated as a fact, but we don’t know which is which until we examine the evidence. McCarthy argued that these instructors could look again at the facts, using the artificial visual technology of film (the camera, like the thermostat, believes it can accurately capture images) to represent facts (their shared belief in the output of the video machine). The facts prove the first instructor “right” because the instructors share a belief in a theory of skiing and can see what actually happened. It’s likely that this theoretical belief system hardened over years of experience on the ski slopes, hypothesizing (an epistemological act) and observing for thousands of hours in the real world. So strong is their belief that they don’t consider other counterfactuals or, if they do, they dismiss them. This behavior, according to McCarthy, is human intelligence.

I decided to test ChatGPT to determine its perspective. I did this in part to honor McCarthy for his vision and hard work. It is bittersweet to be in a position to ask a machine to respond to its human father, who died at his home at Stanford in 2011:

Me: Could AI replace either of the instructors in this scenario?

ChatGPT: AI has the potential to significantly assist or complement human instructors in various teaching or coaching scenarios, including sports like skiing, by providing precise and data-driven insights. However, wholly replacing human instructors, especially in activities that require nuanced understanding of human movements, real-time adaptability, and a deep understanding of the individual learner's physical and psychological aspects, presents challenges. Here’s a breakdown of how AI can assist in this scenario and the limitations it faces:

### How AI Can Assist:

1. **Motion Analysis**: AI can analyze motion in great detail through video analysis. It could objectively assess a skier's form, compare it with optimal techniques, and identify specific mistakes, such as not bending knees sufficiently or incorrect weight distribution. This kind of analysis can support instructors by providing them with detailed, objective feedback.

[ME: Note that the bot relies on a human theory of skiing constructed and discussed on the web in human language by humans. It could not generate this belief in optimal techniques autonomously. It has no epistemic module. I have no theory of skiing, so in this instance the bot’s beliefs may be wrong, but they are far better than mine.]

2. **Real-time Feedback**: Wearable technology combined with AI can offer real-time feedback to learners. For instance, sensors could alert a skier when their knee bend does not meet a certain threshold or when their weight distribution shifts incorrectly.

[Biofeedback? Intelligent behavior? Does this example border on bot epistemology?]

3. **Data-driven Insights**: Over time, AI can collect and analyze data from numerous skiing sessions across different learners, enabling it to identify common errors and effective teaching strategies. This can help instructors adapt their teaching methods based on what has been most effective across a wide range of learners.

[This response deserves discussion. The assessment potential could include biofeedback as well as body cameras. Still, it appears to involve analysis rather than theory building or epistemology. A is learners who make common errors, B is how A was taught or coached, C = naming and listing bad coaching methods.]

### Limitations of AI:

1. **Contextual Adaptability**: Human instructors can adapt their teaching tactics based on the context, such as changing weather conditions, the learner's physical condition, or psychological state. AI might not be as adept at making these nuanced adjustments based on real-time conditions.

[Lacking human intuition? It seems reasonable to surmise that AI could work with real-time data input regarding these factors to provide information useful to human instructors.]

2. **Psychological Understanding**: The psychological aspect of teaching and learning a physical skill is crucial. Human instructors can offer encouragement, understand fear or hesitation, and adapt their teaching approach accordingly. The motivational and empathetic aspects of teaching are areas where AI currently falls short.

[Motivation is entirely a human matter, the impulse that sends a signal from the mind to the brain regulating cognitive or physical action, a major difference between a biological brain in a body and a mechanical digital heuristic.]

3. **Complexity of Human Motion**: While AI can analyze movement, human motion while skiing involves complex dynamics that can be difficult to fully capture and interpret through sensors and algorithms alone.

[I must think on this one. This may be an issue of hardware and training. We have the science of kinesiology, physical therapy, exercise science, dance, theater—it may be that a small bot trained totally on the science of human movement would respond differently to this issue.]

4. **The Art of Teaching**: Teaching is an art that involves more than just correcting physical movements. It includes building a rapport with the learner, inspiring confidence, and sometimes pushing them beyond their comfort zones in a way that AI may not be able to replicate.

[This I know. I have a robust, expert theory of motivation from considerable reading in the scholarly literature, including a section of my dissertation, and years of teaching from the elementary years to graduate school.]

***

Me: What theory or set of beliefs allowed you to generate this response to my query about ski instructors?

ChatGPT: My responses are powered by a complex set of machine learning algorithms and data models, rather than a specific theory or set of beliefs. These algorithms have been trained on a vast corpus of text data from the internet, books, articles, and other sources. This training allows me to generate responses based on patterns, information, and contexts found in that training data. For queries about ski instructors, my response would be based on the information available in my training data related to skiing, teaching techniques, sports education, and related areas. My goal is to provide accurate, informative, and helpful answers based on the patterns and information recognized through my AI model's training.

Me: So you do not have any beliefs at all.

ChatGPT: That's correct. As an artificial intelligence, I don't have beliefs, feelings, or consciousness. My responses are generated based on patterns and information found in the data I was trained on, using algorithms designed to process and generate text. I operate entirely within the parameters set by my programming and training, aiming to assist you with information and tasks to the best of my capabilities, based on the data and rules I've been given.

In 1980 the philosopher John Searle responded to McCarthy’s claim about thermostats and other claims about sentient AI with his famous Chinese Room argument. Here are his own words:

“… I have no objection to the claims of weak AI…. My discussion here will be directed at the claims I have defined as those of strong AI, specifically the claim that the appropriately programmed computer literally has cognitive states and that the programs thereby explain human cognition” (p.1).

In the Chinese Room, an English-speaking person with no understanding of Chinese is given the task of using a set of rules written in English to match the visual shapes of Chinese characters in response to questions written in Chinese. This person can apply the rules and provide output in Chinese that looks for all the world to outsiders as though the person speaks Chinese. In fact, though language has been manipulated in the Chinese Room, and though people on the outside conclude that the person in the room understands, no meaning exists in the card shuffling.
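The mechanics of the room can be sketched in a few lines of Python. This is my own toy illustration, not anything from Searle's paper: the rule book entries, the fallback reply, and the function name are all hypothetical. The English glosses appear only in comments for the reader's benefit. The function never sees them, which is the point.

```python
# Hypothetical rule book: incoming shapes on the left, outgoing shapes on the right.
RULE_BOOK = {
    "你好吗": "我很好",        # gloss: "How are you?" -> "I am fine"
    "你会说中文吗": "会",      # gloss: "Can you speak Chinese?" -> "Yes"
}

# Shapes handed back when no rule matches (gloss: "Please say that again").
FALLBACK = "请再说一遍"


def chinese_room(question: str) -> str:
    """Match the incoming symbols against the rule book and return the
    listed reply. Only the shapes are compared; no meaning is consulted."""
    return RULE_BOOK.get(question, FALLBACK)


print(chinese_room("你好吗"))
```

From outside the room, the replies look like comprehension. Inside, there is only a lookup, and the same code would run unchanged if every character were swapped for an arbitrary squiggle.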

Searle argued that machines lack “understanding” or “intentionality” and are dealing in x’s, y’s, and z’s, not semantics. I understand the word “intentionality” as Searle uses it to mean a conscious impulse generated electrically by the brain, or the appropriate parts of the brain, which produces conscious understanding, feeding decision-making and leading to a conscious executive decision regulating cognition and/or action. Electricity does more today, you know.

Advances in AI, especially in machine learning and natural language processing, have resulted in incredible capabilities in information processing and communication. But the core issues raised by the Chinese Room argument about consciousness, understanding, and the nature of the mind remain relevant and widely debated. The debate extends beyond academic and technical circles and influences public perceptions of AI.

The Computational Theory of Mind currently stands in opposition to Searle’s perspective on strong AI; it takes the position that AI is functionally the same as human cognition and that human thinking is “computational.”[1] As near as I can see, AI is functionally the same or superior at routine, low-level cognitive tasks. At the risk of oversimplification (refer to the link in the footnote to the Stanford Encyclopedia of Philosophy if you want more detail), this theory of mind from cognitive science suggests our minds work like computers. Rather than computers being simulations of brains, human brains are computers; the notion that the human mind is a separate substance is an illusion; and, proponents say, a plethora of neurological brain studies as well as mathematical proofs provide the evidence. I’m less and less sure of what I think… I no longer think the bot is dumb as a post, but I’m not prepared to say it’s conscious as humans are. Humanity is indebted to the AI pioneers and to those currently at work on the task of understanding and harnessing this amazing simulated intelligence.

David Chalmers,[2] who is responsible for calling consciousness the “hard problem,” published a paper circa 2013 in which he cited language from William James’ (1895) The Principles of Psychology discussing the issue of combining consciousness. Searle takes this issue up as well. How do we bridge across human consciousness and fashion what good sense tells us are similar ways of experiencing the world? Here is William James:

“Take a sentence of a dozen words, and take twelve men and tell to each one word. Then stand the men in a row or jam them in a bunch, and let each think of his word as intently as he will; nowhere will there be a consciousness of the whole sentence. We talk of the ‘spirit of the age,’ and the ‘sentiment of the people,’ and in various ways we hypostatize ‘public opinion.’ But we know this to be symbolic speech, and never dream that the spirit, opinion, sentiment, etc., constitute a consciousness other than, and additional to, that of the several individuals whom the words ‘age,’ ‘people,’ or ‘public’ denote. The private minds do not agglomerate into a higher compound mind.”

In some underdeveloped, fledgling manner, my experience with AI provides me with evidence that language and literacy, the cultural creations that make AI possible, do indeed agglomerate private minds into a higher public mind. AI is going to strengthen the force of this agglomeration.

[1] Rescorla, Michael, "The Computational Theory of Mind", The Stanford Encyclopedia of Philosophy (Fall 2020 Edition), Edward N. Zalta (ed.), URL = <https://plato.stanford.edu/archives/fall2020/entries/computational-mind

[2] Forthcoming in (Ludwig Jaskolla and Godehard Bruntrup, eds) Panpsychism, Oxford University Press. This article is based on my opening presentation to “Panpsychism on the Reef,” a workshop on the combination problem held on Lady Elliot Island in July 2012. Thanks to the audience there for useful discussion. The material on the structural mismatch problem benefited from discussion at the Oslo conference on panpsychism in August 2013. I am grateful to many philosophers for their responses to the first draft of this paper, both in conversation and in print. In some cases I have added some new discussion in response, mainly in footnotes. For written comments I am grateful to John Gregg and Tom McClellan