Comprehensible Input, Comprehensible Information, Comprehensible Expertise

On Knowing about Knowing

“Some of the newer adaptations, such as BioGPT, …after training on PubMed articles, could have major implications for the future of medicine and medical research. …[It] is important to consider the ethics of use and prioritise responsible and beneficial applications that serve the best interests of society” (Li, Moon et al., The Lancet Digital Health, Volume 5, Issue 6, e333-e335, June 2023).1

Lancet Digital Health is an open access journal intended to bridge the space between medical research and practice with a global reach. In July 2019, the journal published an article2 summarizing the ways in which AI could strengthen medical care in resource-poor areas of the world. In general, the field was waiting hopefully for AI capable of unsupervised learning and of generating intelligent responses to the pattern recognition and classification problems facing practitioners, who could use help in early detection, diagnosis, and medical decision-making. These professional tasks have some similarities to those facing educators.

In a Lancet editorial published in July 2023,3 the advent of generative AI was called a “technological renaissance” fueling transformations both in clinical workflow and in medical training. For example, the editorial describes a doctor who needs data from mammograms and medical charts for a cohort of females aged 45-54. Instead of searching for data on each individual, compiling the data, and then analyzing the findings, the doctor can now prompt a trained bot with a single question and get a report complete with images and explanations written as comprehensible information. Again, the similarity to teaching is clear. A reading specialist who needs to discern patterns in fine-grained assessment data, in response to a research question under investigation by a professional teacher research team, can make use of a bot trained and updated locally.

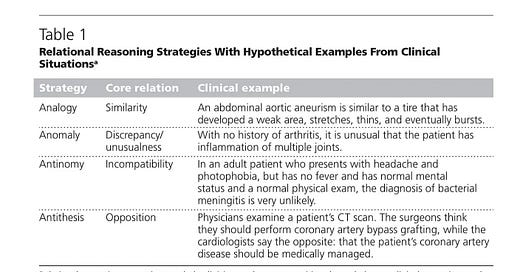

High expectations for AI, now in its generative period, afford enriched opportunities for professionals to hone their relational reasoning skills. For a refresher on Patricia Alexander’s model of relational reasoning, here is a table published in an earlier ltRRtl post:

The Lancet website has for some time been providing exercises, built on pre-AI technology, for professionals to practice their relational reasoning skills. On a page listed as “Picture Quiz” (example coming up below), visitors can find monthly challenges of obvious value to practitioners and anyone else with an interest. The quiz presents a scenario and calls for selecting a treatment. The most recent quiz involves a 52-year-old woman with intermittent bouts of vertigo. I took the quiz and failed miserably, getting the wrong answer twice. Even I could reason that brain surgery would not be the first choice to resolve the issue. The Epley maneuver was my first choice. Win or lose at multiple-choice roulette, everyone gets access to a free article with data and explanations for the best answer. Routing in a natural-language bot, perhaps with an enhanced multiple-choice protocol wherein quiz takers tell the bot their rationale and then receive personalized feedback rather than “wrong, try again,” would be, well, a natural. Imagine what GPT-4 could do for the NAEP reading test.

Nonetheless, AI is a double-edged sword in human medicine. There has long been concern about the spread of misinformation on Twitter, where bona fide medical voices trying to get high-quality information to people vie with conspiracy theorists, a problem especially prevalent during the COVID pandemic. Twitter will undoubtedly form part of the corpus of texts used to train general conversational bots available to the public. Training will not be limited to PubMed articles. It will be up to the user to sort out information from misinformation unless restrictions are placed on the quality of texts put into bot training batches. The bot can’t do that sorting.

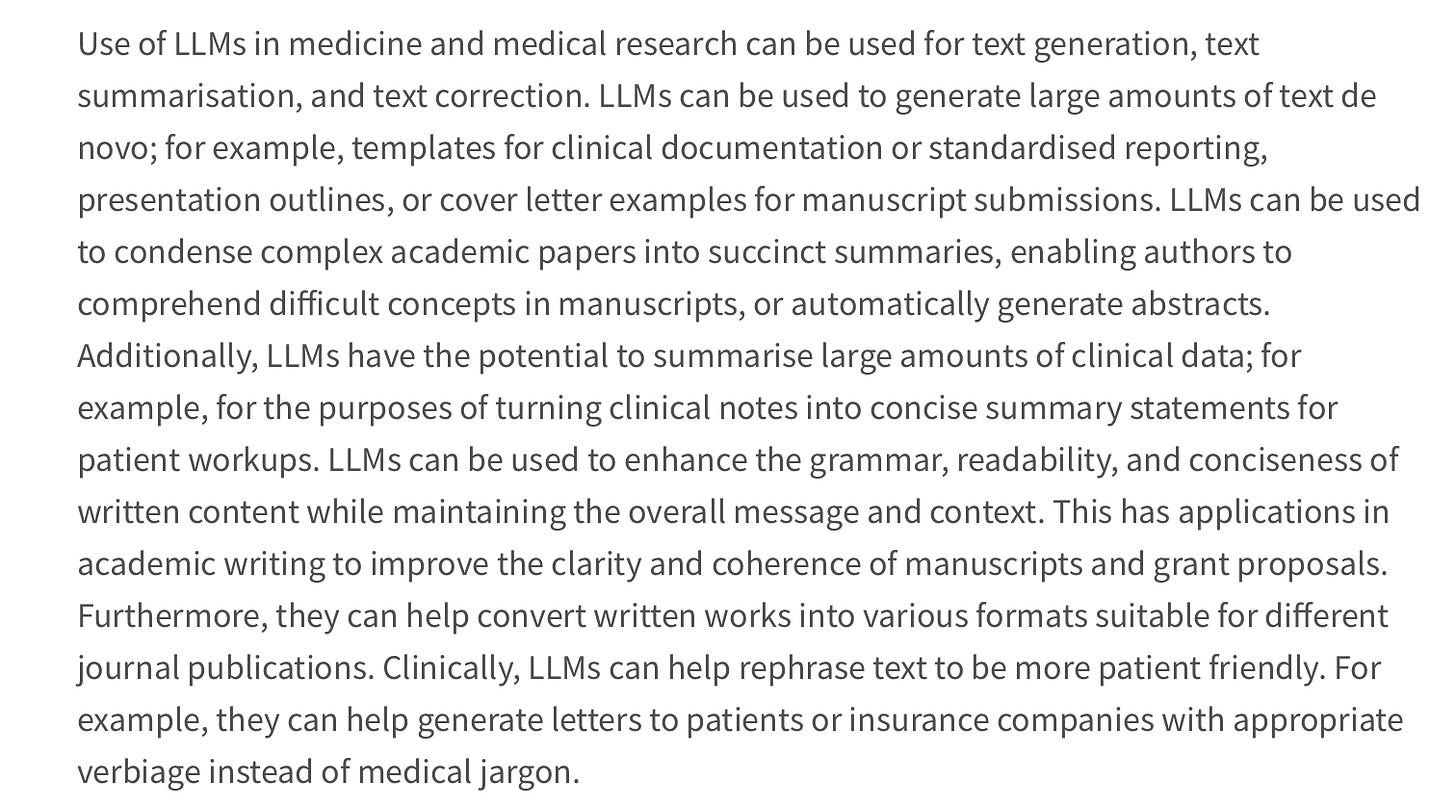

On the other hand, AI affords improvement going well beyond convenience and efficiency. These improvements will have measurable effects on the quality and effectiveness of medical care. Witness (Li et al., 2023):

In all of this activity, as in every profession like teaching wherein a vulnerable client relies on professional expertise to make important decisions, the ethics of AI’s trainers are critical. That includes decisions about which texts to include in the training corpus during unsupervised training, when the machine forms the links among nodes, based on meaning-language patterns, that it will then use to generate responses to prompts. Li, Moon et al. (2023) are highly critical of AI training favoring wealthy, English-speaking nations. As in the field of education, implementation of protocols and deployment of resources in medicine are contingent on local context. Circumstances in high-poverty schools with various languages in use differ from those in affluent schools. Witness:

“There is a significant under-representation of perspectives from other regions of the world, leading to mechanistic models of health and disease biased towards understandings of these processes of high-income countries. For example, a clinician in Africa using LLMs to generate an outline of a presentation for treatment options in diabetes could lead towards focusing on treatment paradigms applicable only in high-income countries” (Li et al., 2023).

*****

MIT offers a course titled “Safeguarding the Future” in its Media Lab, a graduate course for master’s and PhD candidates in the Media Arts and Sciences program. Leading experts guide discussions of how to safeguard the world against our greatest threats. Topics range from the perils of pandemics and nuclear proliferation to failures to address climate change, to technological stagnation and AI. The following abstract describes a study published within the past few months (the article is prepublication and undated but includes recent dates in the reference list) in which non-scientists (students taking the course) used the bot to figure out how to make a lethal virus:

“Nonproliferation measures” as a phrase contains within it echoes of the Cold War, but the modifier “promising” suggests that the destructive aspects of AI are being recognized and potentially managed—at least MIT and leaders in the medical field are on top of the issues of AI training, licensing, access, and application. To what degree is the field of education engaged in a nonproliferation strategy?

*****

The Pew Research Center published results of a qualitative survey of experts in June 2023,4 asking for their fears and hopes for generative AI. Of the many topics probed on the survey, one in particular seems relevant to education: human knowledge. How is AI going to impact human consciousness and its byproduct, knowledge? On one hand, given the ease with which comprehensible expertise can be dispensed, fears hanging on from the olden days of the Internet (googling directions for printing a gun, making a bomb, etc.) increase exponentially when anyone of moderate competence can learn enough to fabricate a virus in an hour. On the other, generative AI can relieve medical professionals of repetitive work, help students pass, with good understanding, a course they would otherwise have failed, etc.

My concern here derives from my perception that conventional education operationalizes teaching knowledge as the primary objective: what a word says, how a fact fits within a theory, what a thing is by definition, the steps in a process, the bones in the hand, dates in history, etc. Don’t get me wrong. I have nothing against knowledge, and I understand its primacy. But if knowledge remains the primary objective of education in a banking model, problems with AI in public school classrooms are likely to proliferate. At best, students who use AI will be suspected of cheating; at worst, they will be expelled.

I find that AI demands that educators carefully distinguish between knowledge and epistemology; knowing something is not the same as knowing how to come to know that thing. It’s interesting that an ambiguity in the word learning permits two teachers on opposite poles of this dichotomy to think they speak of the same construct when they don’t. In my opinion, vague understandings of this distinction have created the Great Debate, Whole Language vs. Phonics, Balanced Instruction vs. Direct Instruction. Direct, systematic, prescriptive phonics pedagogy trains learners to know something—this letter makes this sound and here is how the sound ought to blend with the next sound. Reading Recovery teaches learners to apply what they already know as a resource for coming to know what they don’t—these letters represent this word which makes sense and looks right. By the way, notice these letters.

Knowing how to come to know something, aka epistemology, is in itself complex. The same is true of knowledge. Knowledge itself is the product of epistemology. In other words, what I know I learned by using my epistemic belief system to guide my thinking. If, for example, my epistemology rested on the belief that I’ve gained knowledge when I get the right answer on a test, my learning goal was not deep knowledge to keep forever, but surface knowledge to recall in the moment.

At the risk of oversimplification, consider two major perspectives on epistemology handed down from the early Greek philosophers over centuries in the Western world: Empiricism vs. Rationalism. Rationalism in the modern world originates in Descartes’s philosophy, wherein he doubted reality and the world accessible through the senses; blue for you may be green to me. But he could not doubt that he doubted the reality available to him through his senses. “I think; therefore, I am,” he wrote. “Cogito, ergo sum.”

For him, there must be ironclad evidence in the quest for certainty. Because he could be certain only of his thinking, not of the world he was thinking about, his thinking is the foundation of rationalism. The path to knowledge is through reason using whatever equipment came with your brain at conception, not through sensory experience. Since the rationalist teacher operates under the assumption that knowledge exists as a result of reasoning from truths, students must be “taught the truth” before they can reason on their own to make their own knowledge; hence, direct instruction in particulars precedes knowledge building. Bloom’s taxonomy in full bloom. From this perspective, since conversational AI is unreliable as a source of information and since it seems to reason for the learner, it is a corrupting influence and must be banished from the classroom.

Empiricism, on the other hand, is represented by John Locke’s notion of the tabula rasa, the human mind as a blank slate written upon by the senses. Knowledge is made through experiences and interactions with the physical and cultural world. Educators of various stripes from John Dewey to B.F. Skinner have taken the position that knowledge is made through thinking around experience. All of the empiricists share this assumption: Experience can be guided.

The role of the teacher as an empiricist is aptly summed up in David Pearson’s notion of the gradual release of responsibility. From this perspective, epistemology involves students in guided and informed risk-taking during self-regulated experiences, learning to manage confusion, learning to self-supervise the construction of knowledge. In this view, conversational AI itself has no human knowledge because it is incapable of experiencing the world as humans do, and, as near as I can tell from experts, never will be able to do so. AI is not a knowledge dispenser.

Only humans, not bots, are capable of epistemological work from both the rationalist and empiricist perspectives, and therefore bots produce output that cannot count as knowledge; the output is more aptly characterized as raw information to put through an epistemic filter. Pragmatists like Charles Peirce, along with current voices on sociocultural epistemology (cf. Miranda Fricker), focus on learning experiences in the presence of other human participants as central to gaining expertise. Fricker coined the term “epistemic injustice” to describe the wrong done to learners who are denied opportunities to “develop their capacity as a knower.”

Students can build their capacity to know through guided experiences using productive functions of the bot as a mechanical tool like a calculator within this uniquely human work of becoming an expert. AI challenges educators to sharpen their perspective on epistemology and find productive ways to teach children to use the bot as a knowledge-building tool. It’s becoming clear in the few short months since ChatGPT transitioned from a fiction to a fact: It would be epistemically unjust to ignore opportunities for children to learn ways to accommodate generative AI in their emerging epistemologies.

1. https://www.thelancet.com/journals/landig/article/PIIS2589-7500(23)00083-3/fulltext

2. https://doi.org/10.1016/S0140-6736(19)30762-7

3. https://doi.org/10.1016/j.lanepe.2023.100677

4. https://www.pewresearch.org/internet/2023/06/21/as-ai-spreads-experts-predict-the-best-and-worst-changes-in-digital-life-by-2035/
