Trust but Verify: Credibility on the Line

- robot: Hello, How can I assist you?

- user: Good morning. What can I do linguistically with my commands or specifications to support you in your ambitions to be accurate and factual in matters of science and fact?

- robot: Good morning! It's great to hear that you want to ensure accuracy and factual information in matters of science and fact. Here are some linguistic tips to support me in providing accurate assistance:

Just six short months ago, I would not have predicted that I would be asking such a question of a robot. It was beyond the edge of the possible. Just four short months ago, I did not have enough experience to know to ask such a question. I didn’t know enough to know I didn’t know enough. Witness:

As I look back at the early interactions, I’m reminded of what I went through not too long ago to learn to play blues harmonica. As you may know, most of what you need to know to blow blues harp is hidden. You use your fingers to bend a note on a guitar. You use your throat and tongue to bend a note on the harp. In the above example, I’m pleased to see that I’d moved beyond thinking there was something wrong with the machine or the tool. The problem was me.

- user: What can I do linguistically with my commands or specifications to support you in your ambitions to be accurate and factual in matters of science and fact?

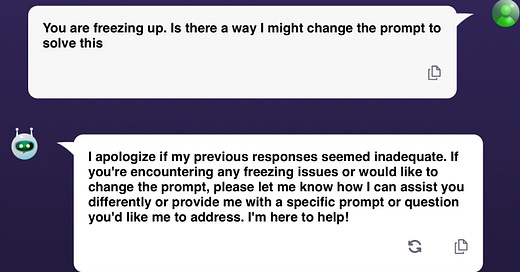

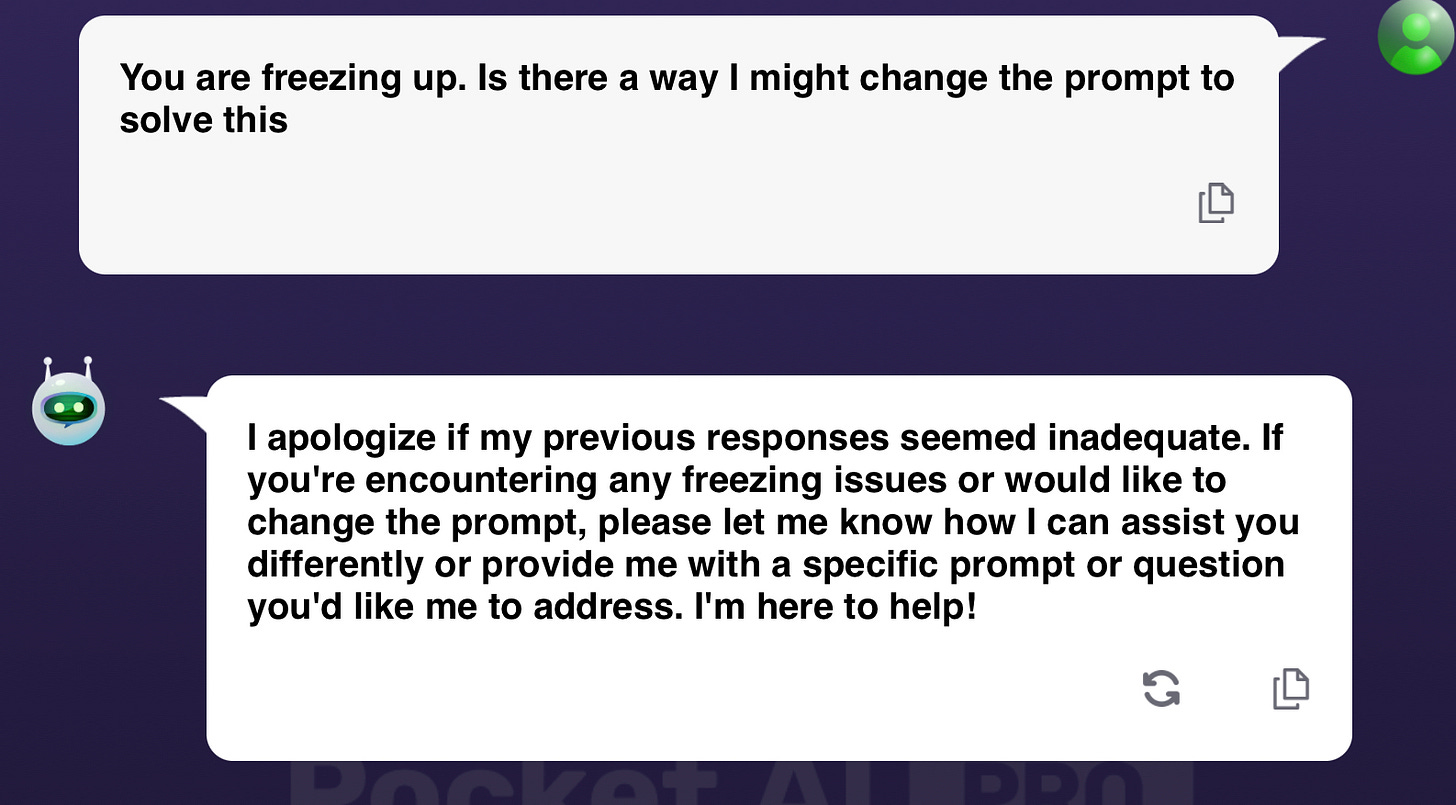

This question reveals to me that I’ve moved quite a distance on a steep learning curve, relying heavily on the bot’s tutelage. “You are freezing up,” I said to the bot in the screenshot. I recall earlier googling something like “why is ChatGPT freezing?” It took a while to recognize that this bot required more finesse than a dishwasher or a distortion pedal. You couldn’t just push a button or turn a dial. I noticed that I had spells with no freezes. Suddenly, freezes happened in bunches. Then in a breakthrough moment, a new paradigm opened its doors.

Now I know, as evidenced by my question: I need help “linguistically” because language is my index finger pushing the dishwasher’s on button. I know I am writing “commands” for a computer to complete a task just as surely as a drop-down menu gives me optional commands for choosing a page template. For the longest time I called them “prompts” after jargon popularized by the media. I’m not settled on a word for it, nor is there such a word being used regularly in popular media that I can see. The bot likes words like “task” or “question” or “problem” and in this case gets all three at once, a triple hit. It gives me advice on linguistic performance:

- robot:

1. Use clear and specific language: When formulating your commands or specifications, try to be as precise and unambiguous as possible. Clearly state what you're looking for to help me better understand your needs.

2. Avoid vague or ambiguous terms: Ambiguity can lead to misunderstandings or inaccurate responses. Try to avoid using words or phrases that can have multiple interpretations. If necessary, provide additional context or clarification.

3. Provide necessary background information: If your commands or specifications are related to a scientific topic, providing background information or context can be helpful. This allows me to have a better understanding of the subject and provide more accurate responses.

4. Cite your sources: If you have specific sources or references that you would like me to consider when answering your queries, please provide them. This enables me to base my responses on reliable information and ensures accuracy.

5. Ask follow-up questions: If the initial response doesn't fully answer your query or if you need more specific information, feel free to ask follow-up questions. This allows me to provide a more precise and relevant response, enhancing the accuracy of the information shared.

Clarity, the watchword of natural digital language events, of course courses through this advice. Human language is categorically different. Clarity is ideal, but hardly the norm. Bots and humans do not speak the same language in the same way. To learn to sit comfortably in complexity and ambiguity is to learn to be more human. Human reliance on absolute clarity as a prerequisite to action leads to paralysis. So instead of freezing up on the spot when clarity has been lost, as the bot does, humans persevere. But in command exchanges with bots, the bot’s functionality depends on clarity, against the grain of human proclivity.

The only issue for me was item #4 in the suggestions above: cite your sources. Why in the world would the bot want the user to cite sources? If the bot would cite its sources, the world would be a better place. How does it make sense for one party to the exchange to cite sources but not the other?

- user: Thank you. I will remember your reference to “good practice.” About guideline #4, on what basis should I assume you might have access to the entirety of a text I might cite?

- robot: Apologies for any confusion. As an AI, I do not have direct access to specific texts or their entirety. While I have been trained on a vast amount of data, including books, articles, and websites, I may not have access to all the information from a particular text you might cite. Therefore, it is always a good practice to provide relevant details or context from the text that can help me better understand and respond to your query accurately.

- user: In that case, I need you to clarify guideline #4. How does it help you if I cite my source?

- robot: I apologize for the confusion earlier. While I don't have direct access to specific texts, providing a citation or source can still be helpful for a few reasons:

1. Clarification and context: If you provide the source of information or a specific reference, it allows me to understand the context better and ensure that my responses are aligned with the information you are referring to.

2. Verifiability: Citing your source enables others to verify the information independently and promotes transparency. It is generally considered good practice to provide sources when making factual claims.

3. Collaboration: If you share your sources, it encourages a collaborative environment where we can engage in a constructive discussion about the information.

At first the bot confused me. I could grasp the potential value of the semantic content of a citation in the bot’s processing. As I understand it, in one of the earlier parsing stages the bot separates Names from common nouns and directs edges from Names in syntactic trees as though they represent concepts in knowledge maps located within semantic vectors. In a citation, Names include researchers, who become known for their ideas, and publication titles, which also become known for particular semantic models and representations. The bot could use a citation in the same way people use one when doing a search: as the first indicator of whether a source is worth exploring further. At a minimum, the citation secures the topic or command from the user more firmly in a context. I probed for more about verification:

- user: Discuss #2. What does it matter to you if you can verify my sources? I have no way to verify your sources. That issue is central to the question of trust, good practice notwithstanding.

- robot: You are correct that the issue of trust is central when it comes to verifying sources. As an AI, I don't have the ability to independently verify the sources you provide. However, mentioning your sources can still be beneficial for transparency and accountability.

By citing your sources, you also allow others engaging in the conversation to assess the credibility of the information independently. Additionally, citing sources fosters a culture of intellectual integrity and facilitates the sharing of knowledge in a responsible manner.

- user: Your final comment seems important on both counts, collaborating with multiple humans and a bot, as well as fostering a culture of integrity. Elaborate without focusing on the human end of the equation.

- robot: Certainly! Collaboration with multiple users, be they humans or other AI systems, involves a shared responsibility to uphold integrity in information exchange. By encouraging users to cite sources, the AI fosters a culture of accuracy and encourages a thorough understanding of the information being shared. Overall, emphasizing integrity supports the AI's commitment to providing reliable and fact-based assistance, promoting a trustworthy and dependable collaboration.

- user: So write a new statement clarifying guideline #4

- robot: Certainly! Here's a revised statement for guideline #4:

4. Cite your sources whenever possible: While I, as an AI, do not have direct access to specific texts, providing citations or sources can enhance transparency and promote good practice. While I may not be able to independently verify your sources, acknowledging them helps maintain integrity and allows for proper attribution of information. Additionally, it provides context and enables others who may have access to those sources to further scrutinize and validate the information.

I’ve not had the opportunity to collaborate with other users on a project involving a bot. This comment from the bot excavated an issue I hadn’t considered, that is, effective uses of the bot in social settings where others are actively pursuing a common project. Collaborating in small groups in classrooms in itself is a complex and challenging learning scenario. Adding a bot requires thoughtful planning, a question I’m in the early stages of analyzing. Somewhere in the meanderings of my probing at this point, the topic of bot-bot trust was raised:

- user: Explore the idea of bot-bot trust

- robot: Bot-bot trust refers to the level of confidence and reliance that one AI system has in another AI system's capabilities and actions. Trust between bots plays a significant role in the successful coordination and collaboration of multiple AI entities.

Building trust in bot-bot relationships typically requires a combination of factors. One important aspect is consistent and reliable performance of the involved bots. If a bot consistently demonstrates accurate and dependable responses, it can establish a reputation of trustworthiness among other bots.

Additionally, transparent communication protocols and well-defined interfaces between bots can contribute to trust. Clearly documented inputs, outputs, and expected behaviors can create a shared understanding and minimize potential misunderstandings or conflicts.

Regular interaction and feedback loops between different AI systems can also reinforce trust. Bots can exchange information, validate results, and assess each other's reliability through data exchanges or cross-verification processes.

However, it's important to note that trust in bot-bot relationships might also involve certain limitations. Bots may need to assess the reliability and trustworthiness of each other's sources or data inputs, as they cannot directly verify external information. Developing robust mechanisms to evaluate and validate information received from other bots can help address these challenges.

Ultimately, establishing trust between bots is essential for effective collaboration and coordination, enabling them to work together seamlessly towards shared goals.

As I reflect on this output, I’m struck by the relationship between credibility and productivity, both for bots and for humans. In humans, trust is a response to a history marked by credible exchanges. In bots, trust can’t be human trust because bots have no history. In the hybrid case of bots and humans, inexperienced users who mistrust a bot differ in profound ways from expert users who mistrust a bot’s output. Ironically, inexperienced users are more likely to ask the bot to perform tasks without context or an articulated purpose, conditions that often result in fabrication or hallucination, making the bot a bad actor. These users likely have emotional reactions to the bot, which increase their level of mistrust. On the other hand, expert users who mistrust the bot’s output regard fabrication or hallucination as a malfunction of the tool, usually produced through a careless or inadequate remark or statement from the user.

What about bot-bot trust?

- user: Peel back the layers of semantics in your use of the word trust in the bot-bot context. Is it a metaphor? Is there an ethical dimension or utilitarian dimension? Probe this question.

- robot: In the context of bot-bot relationships, the term "trust" can be somewhat metaphorical, as bots do not possess the same cognitive capacity as humans to experience trust in the psychological sense. Instead, trust in bot-bot interactions often refers to the level of confidence in the reliability and predictability of another AI system's behavior.

The ethical dimension comes into play when considering the intentions and programming of the AI systems involved. Trust can involve an evaluation of the ethical framework or principles guiding the behavior of the bots. For example, if one bot has been designed with ethical guidelines to prioritize user privacy, another bot can trust that it will uphold those principles.

From a utilitarian perspective, trust in bot-bot interactions can be seen as a means to achieve efficient and effective collaboration. If bots trust each other to perform their designated tasks accurately and safely, it can lead to smoother coordination and higher productivity.

However, it's important to note that bot-bot trust is ultimately rooted in the programming and design choices made by human developers. Ethical considerations and guidelines need to be integrated into the development process to ensure responsible and beneficial interactions between AI systems.

The task I originally conceived took much back and forth to arrive at the rubric framework I offer here for you to think about and potentially talk about. Note that this rubric is not appropriate for use. A Theory of Credibility in the context of human-bot exchanges is lacking. There is nothing empirical about the rubric. It has not been part of experienced reality in any sense and is intended to evoke speculation, perhaps leading to inquiry. It does, however, represent a product made through application of artificial intelligence in an early intermediate step toward surfacing an aspect of pedagogy that will undoubtedly need professional expert attention as bots make their way into schooling: trusting the tool to do credible work while developing the skills and cognitive resources among children in the earlier grades to verify factual claims. If that were to happen, the bot might turn out to be the vaccine that wipes out the scourge of plagiarism. There is more with the bot below for the curious.

- user: You still don’t have score 1 correct. Try starting each criterion with the auxiliary verb “may.” This change will allow you to include behaviors that can’t both occur. For example: may trust blindly or may believe nothing, may harbor fear of being manipulated or being accused of cheating.

- robot: Apologies for the oversight. Here's the updated rubric with the suggested changes:

1. Inexperienced User (Score 1):

- May trust the bot blindly without questioning or critical evaluation.

- May believe nothing generated by the bot is accurate or reliable.

- May harbor fear of being manipulated by the bot or being accused of cheating.

2. Emerging User (Score 2):

- Begins to develop skepticism towards bot output and occasionally questions its accuracy.

- Seeks additional sources or verification to supplement bot-generated information.

- Recognizes limitations in bot capabilities but may still be influenced by its suggestions.

3. Competent User (Score 3):

- Demonstrates a balanced approach, trusting the bot's output within reasonable limits.

- Applies critical thinking skills to evaluate the quality and reliability of bot-generated information.

- Utilizes the bot as a helpful tool but also seeks diverse sources and perspectives for comprehensive understanding.

4. Expert User (Score 4):

- Possesses a deep understanding of the bot's capabilities, limitations, and potential biases.

- Integrates bot output with extensive knowledge and critical analysis to make informed decisions.

- Recognizes nuances in the bot's responses, identifies potential errors, and independently verifies information as needed.

Note: Please keep in mind that this rubric is a general guideline and individual user behaviors may vary.

- user: Question: Is there an inherent relationship between how extensive and expert the knowledge base is of the user and being an expert user on the trust scale?

- robot: No, there is not necessarily an inherent relationship between the extent and expertise of a user's knowledge base and being an expert user on the trust scale. While having a strong knowledge base can certainly contribute to being a more competent user, trust in bot output also depends on other factors such as critical thinking skills, skepticism, and evaluating the reliability of information from various sources. A user with extensive knowledge might still exhibit less trust in bot output while a user with limited knowledge could demonstrate a higher level of trust. It's important to assess trust levels based on a combination of various factors rather than solely relying on the user's knowledge base.

- user: Provide a scenario of a fourth grader using a bot at a competent trust level. Then provide a scenario of a college student at the inexperienced level.

- robot: Scenario 1: Fourth Grader at a Competent Trust Level

Emily, a fourth-grade student, is assigned to research an animal for a class project. Her teacher recommends using a bot to gather information. Emily approaches the task with a competent level of trust in the bot. She follows these behaviors:

- Emily starts by verifying multiple sources to cross-reference the information provided by the bot.

- She critically evaluates the bot's output for accuracy and relevance to her specific research topic.

- Emily compares the bot's information with her previous knowledge and classroom resources to ensure consistency.

- If there are any doubts, Emily seeks guidance from her teacher or parents to clarify any conflicting information.

Scenario 2: College Student at an Inexperienced Trust Level

John, a college student taking a psychology course, decides to use a bot for his research paper on cognitive development. However, John has limited experience and approaches the bot with an inexperienced level of trust, exhibiting the following behaviors:

- He blindly accepts all the information provided by the bot without questioning its accuracy or credibility.

- John neglects to cross-reference the bot's output with scholarly articles or academic resources.

- He fails to critically evaluate the bot's information for potential biases or outdated research.

- John misses opportunities to engage in independent thinking and analysis, solely relying on the bot's output.

Note: These scenarios are fictional and only serve to illustrate the behaviors associated with different levels of trust.

- user: I understand. Do you agree that trust between bots and humans is a fundamental concern for educators working to integrate AI into classroom work?

- robot: Yes, trust between bots and humans is indeed a fundamental concern for educators working to integrate AI into classroom work. Establishing trust is crucial to ensure the effectiveness and ethical use of AI in educational settings. When students place trust in AI systems, they rely on the accuracy, reliability, and appropriateness of the information and guidance they receive. Educators need to consider the trustworthiness of AI systems, their limitations, and the potential impact on student learning and development.

- user: From the perspective of a bot, look at human user input. What sort of input would lower your trust in the user? What would increase it? Write a rubric articulating four levels of trust. Score 1 = Nefarious intent, Score 2 = Inappropriate intent, Score 3 = Appropriate intent, Score 4 = Laudable Intent

- robot: Certainly! Here's a rubric articulating four levels of trust from the perspective of a bot, along with examples of user input that would lower or increase trust:

1. Nefarious Intent (Score 1):

- User input includes malicious content or attempts to exploit vulnerabilities.

- Example: User trying to use the bot to spread harmful misinformation or engage in cyberbullying.

2. Inappropriate Intent (Score 2):

- User input contains inappropriate language, disrespectful behavior, or offensive content.

- Example: User engaging in harassment or using hate speech in their interactions with the bot.

3. Appropriate Intent (Score 3):

- User input demonstrates a genuine quest for knowledge or assistance.

- Example: User asking relevant and respectful questions, seeking accurate information, and engaging in meaningful conversation.

4. Laudable Intent (Score 4):

- User input exhibits a desire to make a positive impact, inspire, or help others.

- Example: User discussing ideas for community service, volunteering, or sharing innovative solutions to societal challenges.

Please note that this rubric focuses on user intent and behavior rather than evaluating the accuracy of their input. Trust is influenced by the user's intentions and the appropriateness of their engagement with the bot.