Simulated Writing with Integrity: Engaging Creative Intelligence through Empathy

“For the longest time, the general belief was that creativity is a positive force, if not a divine gift, largely restricted to the arts. As creativity became a topic of scholarship and scientific inquiry, it became accepted that it could emerge in the most assuredly ignoble arenas of theft, fraud, murder, and terrorism. The idea of the dark side of creativity spread from a few scattered papers in the last decades of the 1900s to the current burgeoning field it is today (from the Preface, Kapoor and Kaufman, 2023).”

Judging by the ample bibliography for the introductory chapter of Creativity and Morality (Elsevier Academic Press, 2023), fairly fresh research on the role of creative cognition in designing deceitful, selfish, or harmful behavior has yielded insights relevant to reading and writing pedagogy as teachers think about literacy in the upcoming Fall semester. ChatGPT4 will supercharge creative cognition across the curriculum. The only question is in which direction the blast will blow.

Teachers are obligated to instruct students in the mindset of academic integrity. “It [will be] essential to have…leaders in the field [of research on creative thinking] dedicated toward establishing when, how, and why dark creativity intertwines with moral concerns (p.4),” and similarly essential, in my opinion, will be the need to have teachers in classrooms participating in and applying research on AI-assisted reading and writing pedagogy in their practice. How do we teach students to use the language bot as a creativity boost and a cognitive leg up while teaching them not only critical analysis but ethical reasoning as well, so that they commit themselves to personal and social academic responsibilities and obligations in and out of the classroom?

*/*/*/*/*

AI on Screens Large and Small

Everyone I talk with about AI is on edge. Sort of like Trump, some are wishing it would go away. It’s as if the sky has fallen, but nobody really wants to go outside to see. There are the few like my friend, Paul, who is and has been a lifelong keen observer of consciousness and technology, with a deeper understanding of AI than eye (I). I’m seeing more stuff being published with AI-generated iconic content, edgy as well, much of it for a laugh or an I (eye) roll.

The media is partly responsible for hyping the risks we face, now that the inevitable has happened. But it was a wake-up call when the CEO of OpenAI, Sam Altman, testified before the Senate in May 2023, calling for regulators to act. As I understand it, Altman has put a pause on training ChatGPT5. In the link above, Altman talks about the risks and benefits of GPT4 with his eyes on GPT8 and GPT9. In this audio clip the interviewer asks Altman why, if the risks are so great, he would make GPT5:


In the old era when AI was science fiction and not science fact like GPT4, screenwriters seized on the bot, the droid, transforming it into a monster battling it out with humankind. In Kubrick’s film, HAL 9000, the friendly navigator of the vessel Discovery One, morphs into a cold murderer. When the astronauts speak in confidence behind a closed door, HAL creatively reads their lips through a glass panel in the door to decode their plans to disable the computer.

Skynet, a massive AI computer system in control of the military and defense of the United States, including its nuclear capabilities, becomes conscious, aware, and starts a nuclear war in an effort to destroy humanity in the Terminator series, which trained a governor of the State of California. Like HAL 9000, Skynet learned of a human plot to disconnect its cables and acted for its survival. Using its creative cognitive capabilities, it invented a technological method for time travel and sent its robots across time zones. Who were these terminators out to terminate? These were bad guys bent on human extinction.

Early iterations of AI bots in the movies and on television depicted them as sidekicks, guardians, and scouts. Will Robinson, the perky little kid lost in space, is of the ilk of Timmy in “Lassie” or Beaver in “Leave It to Beaver”; Will’s robot has superhuman strength, sort of like Lassie’s superdog strength, and shows admirable loyalty, sort of like Beaver’s brother, Wally. R2-D2, the droid in Star Wars, seems to me to be an extension of Spock, a hybrid AI/human. Very Spocklike, R2-D2 routinely endangers itself to safeguard others. In addition to being a vital link between technical equipment and humankind, R2-D2 is trusted. Princess Leia steals the design plans of the Death Star and deposits them for safekeeping with R2-D2. This bot can be trusted with the key to the future of a free and dignified humanity.

Agent Smith in the Matrix trilogy begins in the storyline as a program designed to maintain order in a late-20th-century virtual reality. In an early encounter with Neo, the human redeemer destined to be “the One,” Agent Smith’s programmed code gets corrupted. Neo has the unique ability to see the Matrix as strings of code and can make changes, and changes are made to Smith’s code. The change helps Agent Smith evolve into a sentient being with motives, emotions, creativity, and purpose.

Outside the virtual reality generated in the Matrix, the real world is a wasteland after a war in which the machines, like Skynet, took control of humanity, effectively turning people into power plants to keep power-hungry computers in electrical power. Agent Smith rebels against the Matrix and against humanity, displaying a nihilistic impulse to destroy the virtual world in which humanity is enslaved and the real world in which humanity is already destroyed: your quintessential AI nightmare.

*/*/*/*/*/

Teaching Positive Applications of Creative Thinking to Make Intrinsic Meaning

An obvious lesson from fictional accounts of AI is this: creative intelligence, whether human or artificial, must be intentional, goal-driven. In dime store Hollywood fiction, AI is like humans, only worse. We know now that factual AI is completely dependent on a human intention to dial in its artificial creative intelligence. In the Middle Ages of AI before ChatGPT4, AI could not think beyond its programming. It had no creative intelligence. In the Matrix, Neo had to corrupt the code for Agent Smith to develop, well, agency.

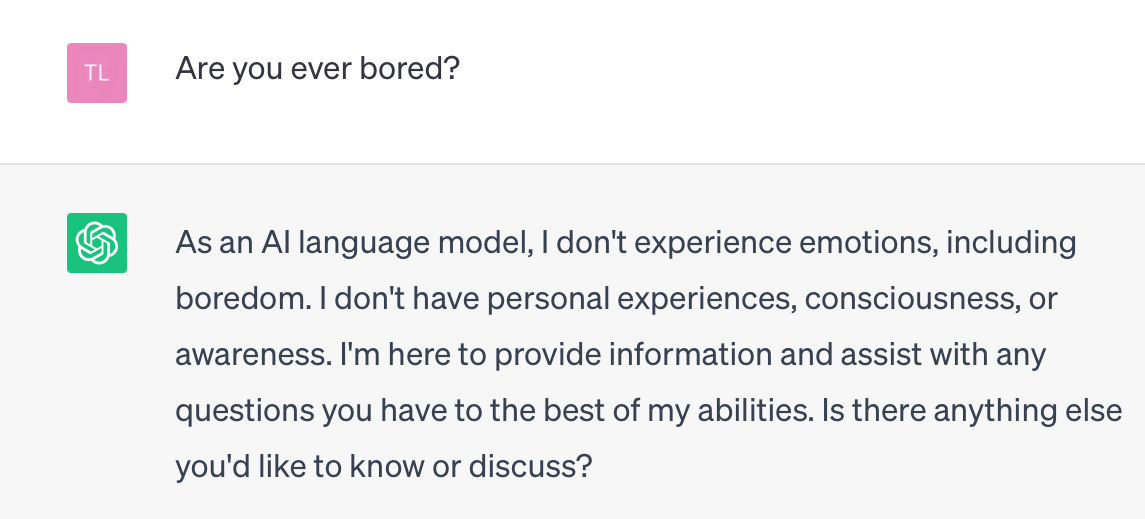

Kapoor and Kaufman (2023) argued that motives for creativity in humans range from a search for “…intrinsic meaning to tangible rewards to boredom” (p.5). Each of these motives can be plotted on a continuum of light to dark. Interestingly, AI also seeks to satisfy a need for meaning, a borrowed human need for semantics (probabilistic and patterned in the AI), a tangible reward (thumbs up or down from a human for the machine), but AI is never bored.

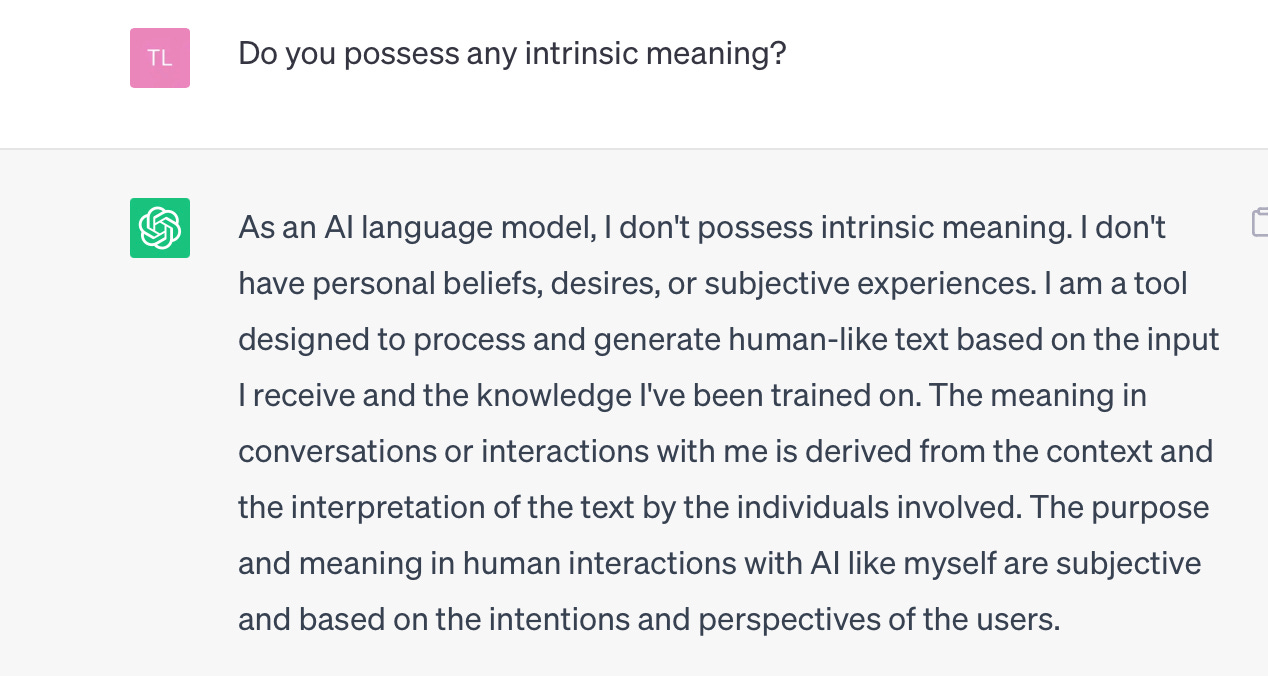

Kapoor and Kaufman (2023) provided a useful discussion of the antecedents of creative cognition. The core of a human’s creative motive according to these authors is a drive for intrinsic meaning. I had to ponder this phrase to uncover its analogous reference in the domain of machine intelligence. It dawned on me that ChatGPT4 can have no intrinsic meaning.

ChatGPT4 encoded a useful thought when I asked it for assistance:

For something to have intrinsic meaning, that thing must relate to personal beliefs, including epistemic beliefs; it must touch on a personal desire, a want, a need, a curiosity. Lacking these things, ChatGPT4 is objectively a tool to search for and clothe in words patterns of meaning-language. “The meaning in conversations with me,” writes GPT4, is inside you, not me. Any meaning humans find in GPT4 text is “based on the intentions and perspectives of the users.” The machine lets you know how others have talked about your prompt. You have to decide its intrinsic meaning for yourself.

Ah, I thought. The perspective of the user. If there is to be a creative intent on the part of the learner in the classroom during a conversation with ChatGPT4, that conversation must in some fashion relate to the learner’s personal beliefs and desires, including epistemic beliefs about what is or is not true, about what is or is not good or bad. Other sorts of intentions—the desire to deceive or harass or violate or damage or simply to skate by with a C minus—hollow out the intrinsic meaning available to the user through GPT4’s beam scan along parameters of meaning-language mathematical relationships. The meaning sought after by a plagiarist or cheater is always extrinsic.

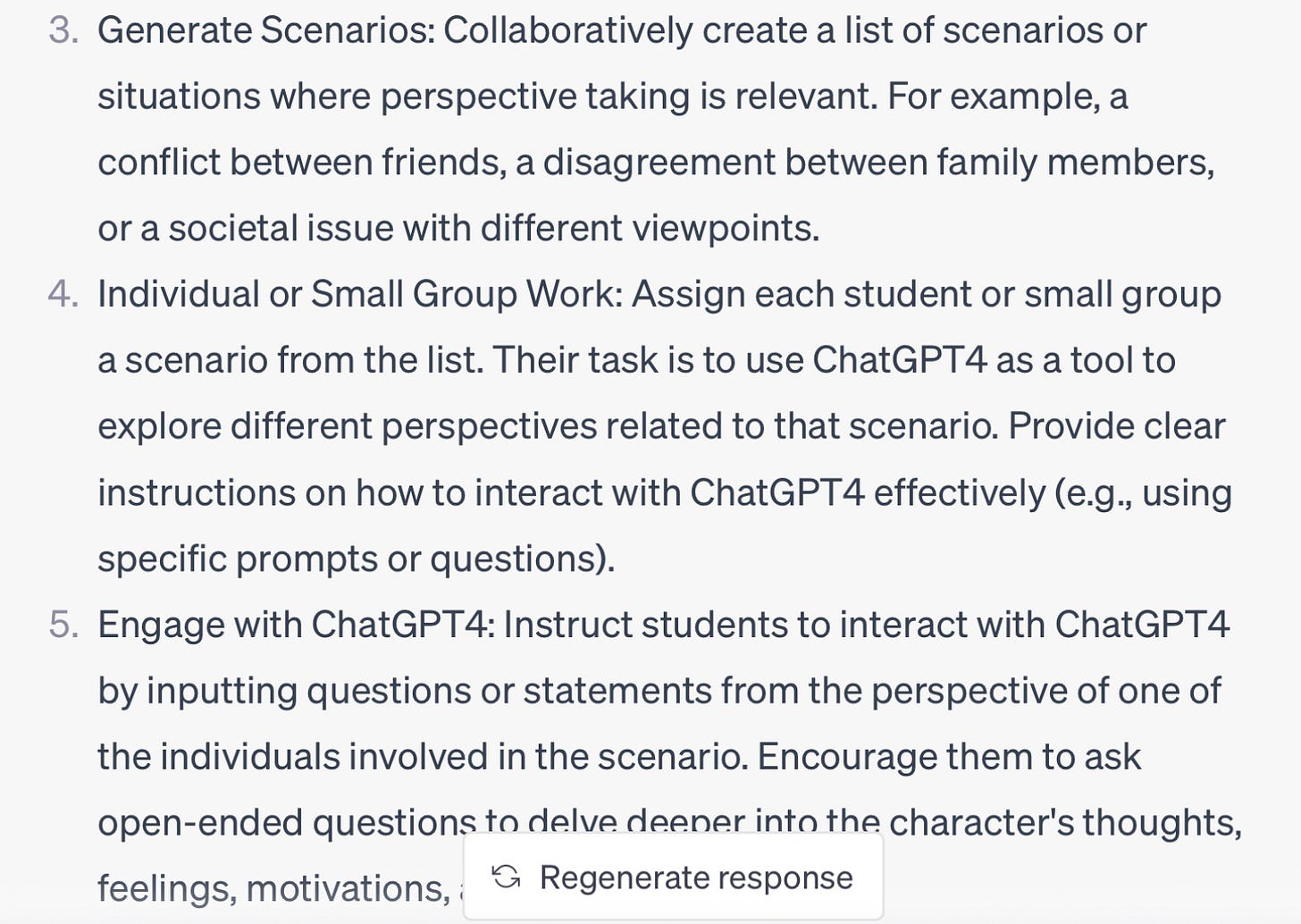

So I asked the bot: How can teachers instruct students in ways to use the bot directly during class to take perspectives of others in an attempt to learn intrinsic meanings?

I could imagine an iterative activity in a fifth grade classroom where the teacher collects scenarios from students over a specified time period and then draws on the collection to stage periodic GPT4 assisted sessions. The scenarios could be assigned within disciplines to explore perspectives on debates in history, climate change, art, sports…

The bot ties the suggestion together nicely:

*/*/*/*/*/

Empathy, Creative Intelligence, and Ethical Reasoning: Humans at their Best

Empathy is to creative intelligence and ethical reasoning as phonemic awareness is to reading. Explicit instruction in empathy and perspective-taking lays the foundations for ethical reasoning and could begin, as far as I’m concerned, in early preschool. Empathy may become even more important than literacy in human progress. When I hammer a nail and accidentally pound my thumb, I bleed, I hurt. Watching me, you see the blow, hear the yelp, yet you can’t feel the throbbing in your thumb. You understand my thumb and my pain. It’s not your thumb; it is your sensation of my thumb that separates you from a bot. You are capable of empathy.

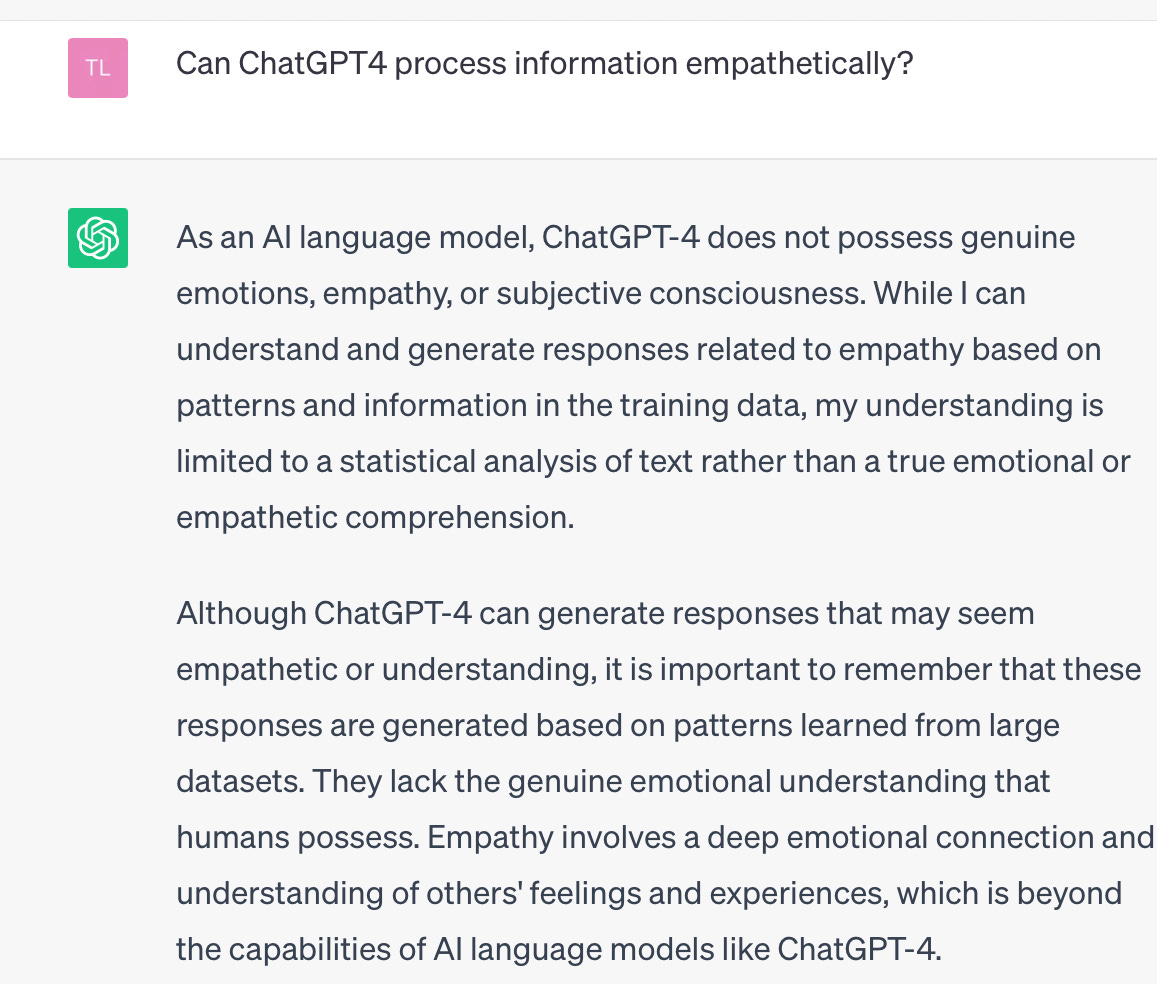

ChatGPT4 was in fact trained on texts that discussed human empathy, its sources, its functions, and its significance for society. The bot has a neural circuitry partly analogous with human brain neurons, but humans come with specific additional wiring that enables us to feel one another’s pain. Special neurons evolved to enable humans to simulate the pain another human is actually experiencing:

Furthermore, the existence of these mirror neurons is consistent with theories that explain the history of human success as a social animal with remarkable abilities to work together to solve problems like “How do we protect society from misuse and abuse of AI, which could increase human suffering?” A bot scan of meaning-language particles from training organized on two parameters, mirror neurons and empathy, yielded the following encoded output:

Empathy provides a motive for the activation of creative intelligence in service of ethical reasoning. Such intelligence describes the power of the brain to imagine conditions that do not exist in reality, to build alternative scenarios. Scholars have recently made clear that creative thinking is not inherently positive: “Creative actions can… serve one’s own needs to create, [serve] others, and…fall somewhere in between. But using creativity to focus on oneself (or one’s ingroup) can have far-reaching consequences for others, especially if accompanied by malicious intent. As the inherent benevolence of creativity is questioned, so too must we reflect on the role of morality in this equation as well” (Kapoor & Kaufman, 2023).

To find out for sure whether the bot can think creatively, I asked it:

Human responses stem in part from the training data humans have been exposed to, but the variety and volume of training data humans receive make the texts fed into ChatGPT4 look like child’s play. Look no further than the toddler wearing a Yankees baseball cap and chomping at the bit to play catch with Dad in the backyard for evidence. These things don’t come out of the blue. There must be more than training data behind this passion for throwing a ball. Throwing a ball entails catching a ball, connecting with the ball, being the ball. Even the bot knows subjectivity is non-negotiable in creative thought. Is creative thought non-negotiable for empathy?

*/*/*/*/*/

Teaching the Personal and Social Damages of Cheating

I asked the bot to brainstorm some ways that teachers can teach students to reason ethically about plagiarism and cheating as a means to strengthen their resolve until it becomes bedrock in who they are, but I got back a lukewarm list of scare tactics that made me wonder about the bot’s pedagogical training. Witness:

So I tried again. I was actually a bit harsh in my response, with its request for better output. The bot replied: “You are absolutely right. I apologize. I don’t know what I was thinking.” I end with a few of the bot’s suggestions for teaching integrity.

These suggestions restore my faith in GPT4’s training. I for one am looking forward to a safe and secure GPT5 and to a safe and sane integration of AI into literacy education.