Physical Libraries In Simulated Neighborhoods

All images were created by pasting the text sections into DALL-E 3 via Chatbox2.

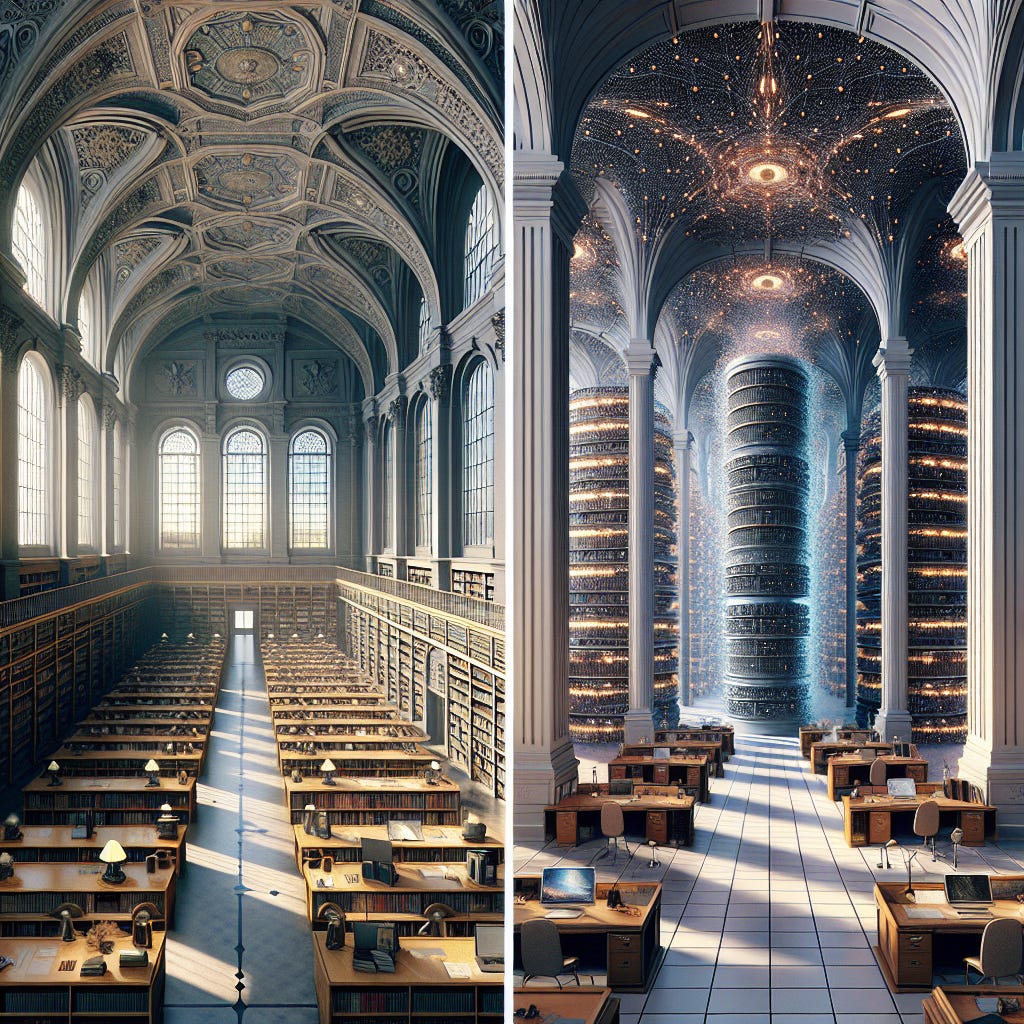

Roll up for the mystery tour! My plan is to journey through the mental space of a physical library and translate the experience into the digital space of a bot. At the end, I offer some Monday Morning suggestions to try to make this something more than an academic exercise—not that I mind academic exercises.

The Physical Library

In Doe Library's grand North Reading Room at UC Berkeley, afternoon light filters through the arched Beaux-Arts windows, illuminating the space where generations of scholars have studied. The room's vaulted ceiling and classical details reflect its origins in the Neoclassical library designed by John Galen Howard and completed in 1911, part of the campus plan that grew out of the international architectural competition won by Émile Bénard. The library serves as the gateway to the Gardner (Main) Stacks, a vast underground structure housing 2.3 million volumes across 52 miles of shelves.

Nearby, the Bancroft Library, located in the Doe Annex, holds one of the largest special collections in the United States. Acquired from Hubert Howe Bancroft in 1905, its holdings include rare books, manuscripts, and archives documenting California and Western American history. Among its treasures are medieval manuscripts, incunabula (books dated before 1501), and literary papers from writers like Mark Twain and Jack London. Unlike the circulating collections of Doe Library, Bancroft’s materials remain pristine in archival housing, consulted sparingly by specialized researchers.

The Machine Library

In the algorithmic realm, the Library pulsates as electric currents race through silicon pathways, transforming abstract mathematics into simulated knowledge. Billions of transistors fire in orchestrated patterns as attention mechanisms light up neural connections like Berkeley's afternoon sun. The most frequently encountered texts leave traces of their own. They are not literally etched into the memory chips; they are encoded in model weights shaped by repeated exposure during training, like footpaths worn across a quad by more than a century and a half of students traversing the same route.

Unlike Berkeley's unchanging architecture, the simulated library rebuilds itself with each query, its circuits heating measurably as computation intensifies. The heat, however, does not mark where popular information resides: every answer passes through the same network of weights, whether the question concerns Hamlet or an obscure pamphlet. The hierarchy of accessibility lives in those weights. Heavily represented knowledge comes back fluent and precise, while sparsely represented material returns vague or garbled, mirroring the difference between reaching for a reference-desk book and requesting a vault-stored incunabulum.

***** *****

Library of Congress Structure

Developed at America's national library in the late 19th and early 20th centuries, the Library of Congress Classification (LCC) system provides an intellectual framework for organizing scholarly collections. Unlike the decimal-based Dewey system familiar to public library users, the LCC uses 21 alphabetical classes representing major academic disciplines, from Philosophy to Military Science to Literature.

Each item receives a distinctive alphanumeric signature combining letters and numbers in a precise hierarchy. First comes the class letter (like "P" for Language and Literature), followed by subclass letters. Next, a class number from 1 to 9999, sometimes extended with decimals, pinpoints the exact subject area. The "Cutter number" follows, usually arranging works alphabetically by author within their subject category. Publication year typically completes the sequence, ensuring each volume has its unique address on the shelf.
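To make that anatomy concrete, here is a minimal Python sketch that splits a call number into class letters, class number, Cutter numbers, and year, then sorts a handful of call numbers into shelf order. It assumes a simplified format covered by one regular expression, and it reads Cutter digits as decimal fractions (so .P65 shelves before .P7). Real LCC call numbers have many more variants, and the sample call numbers are invented for illustration.

```python
import re
from decimal import Decimal
from typing import NamedTuple, Optional

# Simplified pattern (an assumption, not the full LCC rules): class letters,
# class number with optional decimal, zero or more Cutters, optional year.
LCC_PATTERN = re.compile(
    r"^(?P<letters>[A-Z]{1,3})"
    r"\s*(?P<number>\d{1,4}(?:\.\d+)?)"
    r"(?P<cutters>(?:\s*\.?[A-Z]\d+)*)"
    r"(?:\s+(?P<year>\d{4}))?$"
)


class CallNumber(NamedTuple):
    letters: str                              # class + subclass, e.g. "PR"
    number: Decimal                           # subject area, e.g. 4034 or 9387.9
    cutters: tuple[tuple[str, Decimal], ...]  # e.g. (("P", Decimal("0.7")),)
    year: Optional[int]                       # publication year, if present


def parse_call_number(text: str) -> CallNumber:
    match = LCC_PATTERN.match(text.strip().upper())
    if match is None:
        raise ValueError(f"not a simple LCC call number: {text!r}")
    # Cutter digits are read as a decimal fraction: P65 -> 0.65, P7 -> 0.7.
    cutters = tuple(
        (letter, Decimal("0." + digits))
        for letter, digits in re.findall(r"([A-Z])(\d+)", match["cutters"])
    )
    year = int(match["year"]) if match["year"] else None
    return CallNumber(match["letters"], Decimal(match["number"]), cutters, year)


def shelf_key(call: CallNumber) -> tuple:
    # Compare letters, then class number, then Cutters, then year;
    # a missing year sorts first within an otherwise identical call number.
    return (call.letters, call.number, call.cutters, call.year or 0)


def shelf_order(call_numbers: list[str]) -> list[str]:
    return sorted(call_numbers, key=lambda s: shelf_key(parse_call_number(s)))


if __name__ == "__main__":
    samples = ["PS3545 .A5 1950", "PR4034 .P7 1972", "PR9387.9 .A3 T5 1958",
               "PR4034 .P65 1990", "PR4034 .P7 1813"]
    for call in shelf_order(samples):
        print(call)
```

Sorting on the parsed parts rather than the raw strings is what places works on the same subject side by side, which is the adjacency the next paragraph describes.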

This classification ecosystem flourishes in academic environments like UC Berkeley's Doe Library, where specialized collections require the LCC's affordances and flexible architecture. While complex at first, the system reveals itself as a logical map to knowledge—guiding researchers through adjacent intellectual territories where related ideas cluster together.

Embedded Algorithmic Space

In the bot's silicon architecture, the hierarchy is organized by exposure rather than by shelf space. The hardware does keep its own tiers of fast cache, slower memory, and cold storage, but those tiers hold model files and data blocks, not individual facts sorted by popularity. What differs from one fact to the next is how deeply it was carved into the model's weights during training: material seen millions of times is encoded robustly, like a path worn smooth by traffic, while material seen once or twice leaves faint traces that more common patterns easily override.

The mathematics of the model creates neighborhoods, too, though they are geometric rather than physical. Words and concepts are represented as vectors, and training positions them according to co-occurrence: terms that appear in similar contexts end up near one another, much as the LCC shelves related works side by side. Attention mechanisms then weigh those neighbors against the context of each query. Heavily represented knowledge occupies dense, well-mapped regions of this space, while rarely seen material sits in sparse outskirts where the model's sense of what belongs nearby is weak.
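The neighborhood idea can be sketched in a few lines of Python. This is a toy under loud assumptions: the three-dimensional vectors are hand-made for illustration (including a hypothetical "Obscure pamphlet"), whereas real models learn vectors with hundreds or thousands of dimensions, and cosine similarity is one common way, not the only way, to measure closeness.

```python
import math

# Hand-made 3-D vectors, invented for illustration only.
EMBEDDINGS: dict[str, tuple[float, ...]] = {
    "Hamlet": (0.9, 0.8, 0.1),
    "Macbeth": (0.85, 0.75, 0.15),
    "Jane Eyre": (0.7, 0.2, 0.6),
    "Wuthering Heights": (0.65, 0.25, 0.65),
    "Obscure pamphlet": (0.1, 0.9, 0.8),  # hypothetical, placed off on its own
}


def cosine_similarity(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """1.0 means the vectors point the same way (close neighbors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(title: str, k: int = 1) -> list[str]:
    """Return the k other titles whose vectors are most similar to this one."""
    query = EMBEDDINGS[title]
    scored = [
        (other, cosine_similarity(query, vector))
        for other, vector in EMBEDDINGS.items()
        if other != title
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:k]]


if __name__ == "__main__":
    for title in EMBEDDINGS:
        print(title, "->", nearest_neighbors(title))
```

With these made-up vectors, Hamlet's nearest neighbor is Macbeth and Jane Eyre's is Wuthering Heights, while the obscure pamphlet's best match scores only about 0.70. That is a rough picture of a thinly populated outskirt where the model has little sense of what belongs nearby.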

***** *****

The Archive Access

In the library basement, special collections hold rare manuscripts requiring formal requests and white gloves to access. Their limited circulation means few scholars encounter them; their influence is contained within specialist domains. Their preservation is inconsistent—some meticulously maintained, others slowly deteriorating from neglect.

The Long Tail

At the edges of simulated knowledge lies the "long tail," characterized by sparse and fragmentary representations. This phenomenon reflects limited exposure during pre-training to rare or infrequently encountered information. The bot may recognize titles, author names, or isolated facts about such works, but its output is often fluff: vague, waffling prose that lacks both specificity and depth. It may, for instance, fail to recall intricate character relationships or plot details. A year ago, I watched a bot place Jane Eyre in Act II of Hamlet.
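A toy calculation shows why the tail is so thin. Assume, purely for illustration, that mentions of 10,000 titles in a training corpus follow Zipf's law with exponent 1, so a title's frequency is proportional to 1/rank. That distribution is an assumption, not a measurement of any real corpus.

```python
def zipf_weights(n_items: int, exponent: float = 1.0) -> list[float]:
    """Relative frequency of each item by rank under an assumed Zipf law."""
    return [1.0 / rank**exponent for rank in range(1, n_items + 1)]


def share_of_mentions(weights: list[float], start: int, stop: int) -> float:
    """Fraction of all mentions that fall on items ranked start+1 through stop."""
    return sum(weights[start:stop]) / sum(weights)


if __name__ == "__main__":
    weights = zipf_weights(10_000)
    head = share_of_mentions(weights, 0, 100)         # top 1% of titles
    tail = share_of_mentions(weights, 1_000, 10_000)  # bottom 90% of titles
    print(f"top 1% of titles: {head:.1%} of mentions")
    print(f"bottom 90% of titles: {tail:.1%} of mentions")
```

Under these assumptions, the top 1% of titles collects about 53% of all mentions, while the bottom 90% share only about 23.5%, spread so thinly that each individual title appears very rarely.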

Efforts to address this limitation include retrieval-augmented generation (RAG), which fetches relevant documents at query time and hands them to the model as context, and frameworks like Logic-Induced-Knowledge-Search (LINK), which systematically generate and incorporate long-tail knowledge. These methods help patch gaps in the model's coverage, so that rare knowledge is less often relegated to peripheral representations and becomes more accessible for reasoning and inference tasks. Despite these advancements, long-tail knowledge remains a problem for the exploratory and generalization capabilities of language machines.

“…We estimate that today's models must be scaled by many orders of magnitude to reach competitive QA performance on questions with little support in the pre-training data. Finally, we show that retrieval-augmentation can reduce the dependence on relevant pre-training information, presenting a promising approach for capturing the long-tail” (Kandpal et al., 2023).
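The retrieval step in RAG can be illustrated with a deliberately crude sketch. It assumes word overlap as the relevance score (production systems typically use vector embeddings), uses a three-sentence document store built from facts stated earlier in this essay, and stops at assembling the prompt rather than calling any model API. All function names are hypothetical.

```python
import re
from collections import Counter

# A hypothetical mini document store; each sentence restates a fact from this essay.
DOCUMENTS = [
    "The Bancroft Library was acquired from Hubert Howe Bancroft in 1905.",
    "Doe Library was completed in 1911.",
    "The Gardner (Main) Stacks hold 2.3 million volumes.",
]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Count shared words (with multiplicity): a crude stand-in for embedding search."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Assemble the augmented prompt that would be sent to a language model."""
    sources = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the sources below.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("When was the Bancroft Library acquired?", DOCUMENTS))
```

The Bancroft sentence wins with five shared words, so the model would answer from a supplied source instead of relying on whatever faint trace of the fact survived pre-training.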

Cultural Memory

Given recent ideological attacks on top-tier universities from the Trump administration, what I’m about to report may no longer be accurate. At Harvard's Houghton Library, at least for now, there is explicit recognition of historical collection imbalances. Their Early Books and Manuscripts department asserts that its "…historical focus on white men of European origin…" is a problem and is actively working "…to more fully represent the lives of those in other areas and of other ethnicities…" while seeking to "…acquire works by and for women and materials that document the history of LGBTQ people." Harvard Library’s acknowledgment is evidence of growing institutional awareness of how collection policies reflect and perpetuate power structures.

The Training Imbalance

"Speak, Memory: An Archaeology of Books Known to ChatGPT/GPT-4" (Chang, Cramer, Soni, and Bamman, 2023) revealed dramatic disparities in how accurately the models could fill in a masked character name from a passage, suggesting a long-tail problem: 24.4% for pre-1923 LitBank works and 23.5% for Science Fiction/Fantasy, compared to only 2.0% for Global Anglophone texts and 1.7% for BBIP/BCALA (Black Book Interactive Project and Black Caucus American Library Association) award winners.

This digital archaeology exposed how large language models actively reinforce and amplify existing hierarchies of knowledge. The Library of Congress Classification system's physical organization of literary works finds its digital parallel in the differential treatment of texts within AI training data. Just as library collections privilege certain literary traditions over others through space allocation and acquisition budgets, language models encode the same biases in their weights through selective exposure to texts.
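The size of the gap in the Chang et al. figures is easier to see as a ratio. A few lines of Python, using only the four percentages reported above, divide the best-scoring category by each of the others. The dictionary and function names are illustrative.

```python
# Accuracy percentages as reported by Chang, Cramer, Soni, and Bamman (2023).
REPORTED_ACCURACY = {
    "Pre-1923 LitBank": 24.4,
    "Science Fiction/Fantasy": 23.5,
    "Global Anglophone": 2.0,
    "BBIP/BCALA award winners": 1.7,
}


def disparity_ratios(accuracy: dict[str, float]) -> dict[str, float]:
    """How many times more accurate the best-scoring category is than each one."""
    best = max(accuracy.values())
    return {category: best / value for category, value in accuracy.items()}


if __name__ == "__main__":
    for category, ratio in disparity_ratios(REPORTED_ACCURACY).items():
        print(f"{category}: {ratio:.1f}x gap")
```

By this arithmetic, pre-1923 LitBank works were recalled about 12 times as accurately as Global Anglophone texts and about 14 times as accurately as BBIP/BCALA award winners.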

When we consult bots, we participate in this reproduction of bias. The problem is that we participate in the same reproduction when we visit the physical library, and most of us reject boycotting the library as a solution. We participate when we teach the canon, too, and that discussion is fraught with problems of its own. The Berkeley researchers identified troubling implications of this "lack of diversity" in what AI systems have memorized, noting serious "…downstream effects of ChatGPT's demonstrably limited background in terms of what it does and doesn't 'see' when we ask it for things." Whether physical or digital, the classification systems we apply shape scholarly engagement: what's easily accessible becomes what's frequently studied.

The researchers found that bots memorized works readily available online, predominantly public domain works from Project Gutenberg and popular genre fiction with "nerd appeal" often quoted online. Meanwhile, works from marginalized literary traditions remain underrepresented in what the models have memorized, just as they've been historically underrepresented in prestigious library collections.

This technological reproduction of academic power has profound implications for knowledge access. When an AI assistant can fluidly discuss Western literary classics but struggles with Global Anglophone literature or works by Black authors, it effectively reinscribes the colonial knowledge hierarchies embedded in library classification systems. The digital infrastructure of AI thus becomes an invisible extension of the physical infrastructure of academic libraries—both reflecting and reinforcing which intellectual traditions are deemed worthy of preservation and study.

These findings illuminate how technological advancement, without conscious intervention, tends to calcify rather than disrupt existing power structures in knowledge production. Just as university libraries at Harvard and Berkeley must actively work to counterbalance historical collection biases, AI systems require deliberate reconfiguration to avoid perpetuating digital canons that marginalize non-Western and minority literary traditions.

***** *****

For Next Monday Morning…

Conduct accessibility audits: Have students map their school or local library, documenting which sections receive the most space, light, and prominent positioning. Compare the physical footprint of Western canonical works versus Global Anglophone texts.

Create "path of resistance" exercises: Assign students to locate specific works by authors from different traditions, tracking time required and obstacles encountered. Use this data to visualize knowledge accessibility patterns.

Examine collection development: Invite the school librarian to discuss acquisition policies, budgets for different literary categories, and historical imbalances in the collection.

Test AI knowledge gaps: Design experiments where students test language models with character names from diverse literary traditions, recording accuracy rates for Western canon versus Global Anglophone texts versus BBIP/BCALA works.

Visualize the "long tail": Create classroom displays showing the distribution curve of AI accuracy across different disciplinary traditions, helping students understand why certain kinds of knowledge appear fragmentary or erroneous.

Predict hallucination vulnerability: Teach students to anticipate when AI systems might hallucinate based on documented knowledge gaps, developing critical assessment skills for digital information.

Compare retrieval challenges: Design parallel research assignments using physical libraries and AI tools, documenting which knowledge traditions require more effort to access in each system and which systems result in more confidence in the findings.

Document "heat signatures": Have students track which materials show physical wear in libraries and which generate confident responses from AI, creating a comparative analysis of knowledge hierarchies.

Curate counter-collections: Develop classroom resources that deliberately highlight works residing in the "long tail" of both physical and digital knowledge systems.

Analyze classification consequences: Examine how Library of Congress categories place certain traditions in peripheral positions, then identify how these same patterns manifest in digital information systems.
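For the AI knowledge-gap experiment, a small script can keep the bookkeeping consistent across a class. This is a minimal sketch, assuming students paste each passage and the model's reply in by hand; the class and function names, the `[MASK]` token, and the sample sentences are hypothetical, and the script calls no AI service.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ClozeTrial:
    tradition: str    # e.g. "Western canon", "Global Anglophone", "BBIP/BCALA"
    passage: str      # passage with one character name replaced by [MASK]
    answer: str       # the hidden character name
    model_reply: str  # the model's guess, pasted in by a student


def mask_name(passage: str, name: str, token: str = "[MASK]") -> str:
    """Hide a character name; require exactly one occurrence so it can't be copied."""
    if passage.count(name) != 1:
        raise ValueError(f"{name!r} must appear exactly once in the passage")
    return passage.replace(name, token)


def accuracy_by_tradition(trials: list[ClozeTrial]) -> dict[str, float]:
    """Share of trials per tradition where the reply matches the hidden name."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for trial in trials:
        total[trial.tradition] += 1
        if trial.model_reply.strip().lower() == trial.answer.strip().lower():
            correct[trial.tradition] += 1
    return {tradition: correct[tradition] / total[tradition] for tradition in total}


if __name__ == "__main__":
    # Invented sentences; replace with the class's own passages and model replies.
    trials = [
        ClozeTrial("Western canon", mask_name("Elizabeth read the letter twice.", "Elizabeth"),
                   "Elizabeth", "Elizabeth"),
        ClozeTrial("Global Anglophone", mask_name("Amara walked to the market.", "Amara"),
                   "Amara", "Ngozi"),
    ]
    for tradition, rate in accuracy_by_tradition(trials).items():
        print(f"{tradition}: {rate:.0%}")
```

Exact matching is strict; a class may decide to accept surnames or nicknames, which is itself a judgment worth discussing with students.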

Please share your results with me if you try any of these. If you'd rather explore them with other readers in the discussion section, by all means take this opportunity. These kinds of tasks might help make abstract concepts tangible for students, giving them critical awareness and practical strategies for navigating knowledge systems with deeply embedded biases and with accuracy that can vary from one word of a bot's output to the next. Passive intake of information becomes active investigation into how knowledge is structured, preserved, and prioritized across physical and digital environments.