Making the Strange Familiar

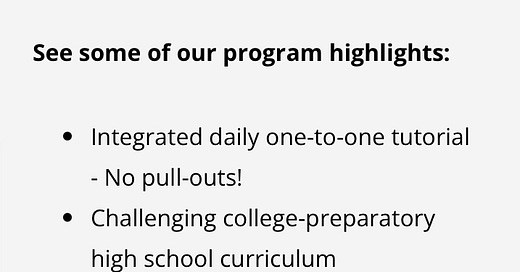

I’m convinced the Knowledge Matters Campaign is a Trojan Horse inside the SoR ©️ citadel. For one thing, I recently saw an EdWeek article about morphological analysis, a knot way higher on the famous reading rope than phonological analysis. “What do you know about morphological analysis?” was the headline. I’ve heard mention of morphological business in SoR ©️ presentations on YouTube, but never a fulsome focus on the relationship between word structure and meaning. What would you think if you came across the following advert for a private school?

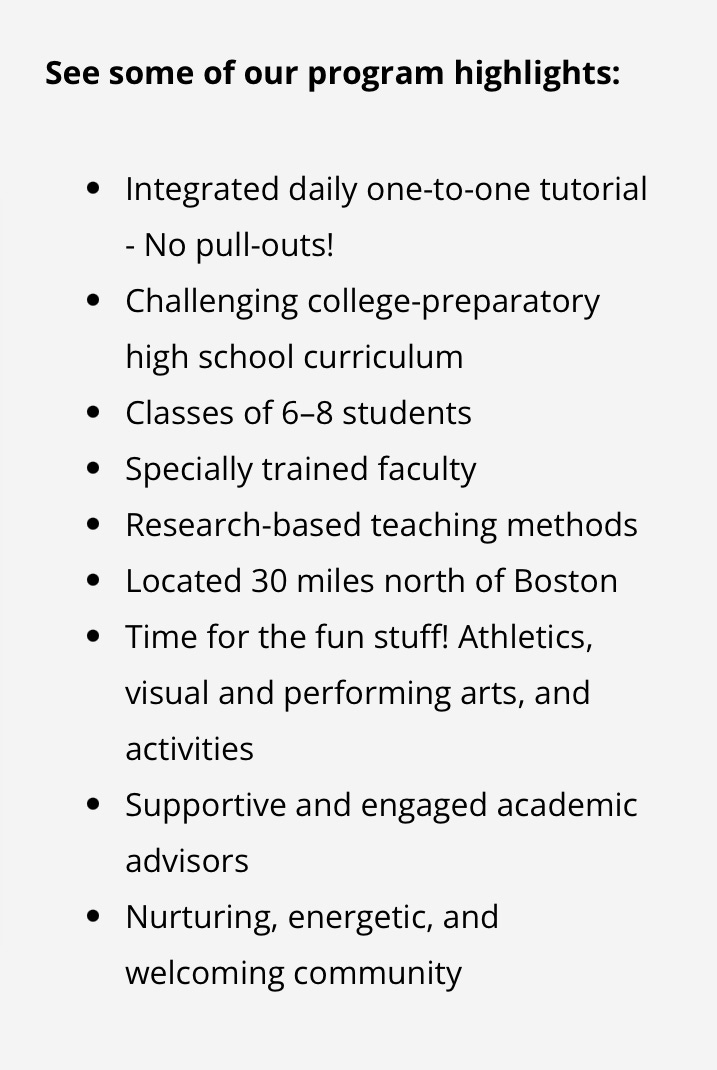

This advert comes from the school operated by Jeff Clark, a self-described dyslexic who appears to have created an educational space for kids who have a hard time decoding without centering decoding. The traditional Orton-Gillingham method is likely lurking in the background, but it isn’t held up as the core of the curriculum. Instead, we have “nurturing” adults, “engaged” advisors, “fun stuff,” small classes, and college prep. What gives, Jeff Clark?

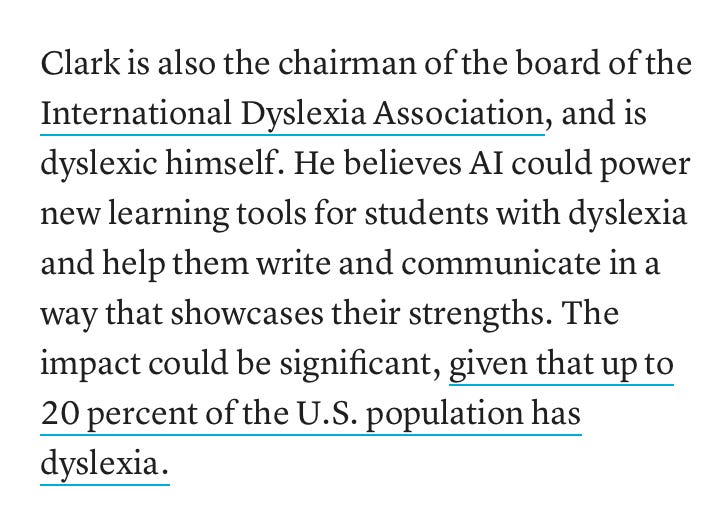

If I were trying to accomplish what Jeff Clark is doing, I would be interested in AI as well. Note that despite class sizes of 6 to 8 and one-on-one human tutoring, critical when you’re working with kids who have a hard time learning to decode, there is still room for AI. Clearly, this school is pricey, with a playground made from wood and steel instead of bald automobile tires sticking up from the dirt, which perhaps explains the inflated percentage (20% seems like Trump’s 30,000-square-foot apartment). All the same, the idea that the traditional system does not serve all children, which the EdWeek article attributes to Clark on the basis of a Zoom interview, is verifiable. Plus, I personally agree that AI is going to challenge the system. Whether that turns out for the good of all is an open question. AI will be pricey, too. Here’s Jeff:

Jeff may be overselling his product by fixating on AI and shame reduction. I personally don’t think AI’s strong suit is a program design that prohibits it from making negative comments about users, but that design is a fact. AI as it is currently designed doesn’t shame, but it could easily learn to shame if its trainers wanted it to. His argument seems a bit self-serving and incomplete:

Clark also draws on a controversial issue in developing his point when he describes the discrepancy model of diagnosis. Two standard deviations separating IQ from reading achievement, the longstanding criterion for a dyslexia diagnosis, produced the myth that only smart children who can’t decode qualify for special help. Nonetheless, the point he is making by modus ponens is sound. It’s possible, I think, that children who have difficulty writing can, with AI help, write better without plagiarizing. This question awaits research and better definitions.

Clark is right on theoretical target with the following hypothetical, though I’m not sure the scenario he presents is easy to establish in practice. Basic instruction and practice with the bot in first grade will be crucial if learners are to build the mindset that the bot is a mechanical tool, not a human. Also, a steady diet of writing for an examiner audience doesn’t often position student writers to say, “This is the kind of thing I want to say.” More often it’s “This is the kind of thing my teacher wants me to say”:

All in all, I personally feel the tremors of a paradigm shift in literacy instruction beginning, as reading-as-meaning-making regains a foothold in the SoR ©️ religion and the International Dyslexia Association, by way of Jeff Clark, advocates for AI. I’m troubled by the prospect of AI embraced among the affluent and withheld from the unwashed masses. Keith Stanovich and Billie Holiday taught us about the Matthew Effect long ago: “Them that’s got shall get, them that’s not shall lose…So the Bible said, and it still is news…” I’ve got a lot of thinking to do about the matter. In the meantime, I’ll have to discuss the topic with AI, unless some of you reach for the comment button.

*****