For this post I was interested in developing a way to open up discussion about basic human reading comprehension, nothing fancy, reading a newspaper article, in the context of large language model interactions. Teachers together might discuss this following language and create their own local definition.

Teachers might do this same activity with students, working toward a shared understanding of very basic comprehension as close as possible to the text. We’re not talking about critical or creative comprehension, dialogic comprehension—basic literal comprehension to the degree such a thing exists.

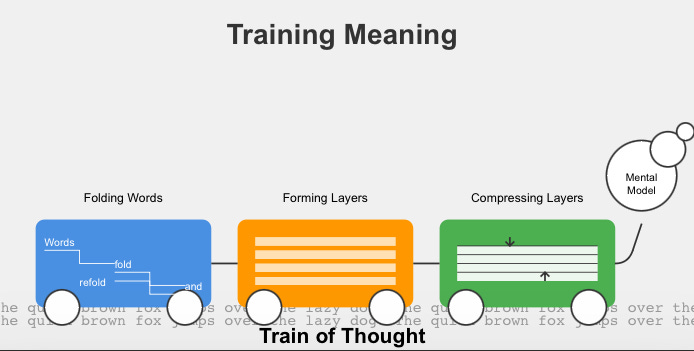

“Words fold and refold onto themselves to fashion layers of ideas which we link together to form a train of thought running through consciousness. As the train moves along the tracks of the text, it forms and reforms layers, then it compresses them into one laminated unit. In this way the train of thought eventually reaches its destination.”

***

I gave this definition to Claude and asked the bot to diagram the sense it made of the words. This diagram might be productive to use in an extension of the first suggestion.

***

Finally, I asked the bot to discuss how this notion of human basic literal comprehension mirrors AI basic comprehension. I’m not exactly sure how I would use it in either a professional development or a classroom context, but I’m pretty sure it might generate some insights. It did for me.

All of the rest is Claude:

Technical Interpretation of the "Training Meaning" Diagram:

1. Input Processing (First Car - "Folding Words"):

This stage represents the initial parsing and tokenization of textual input. The "folding" metaphor illustrates the process of lexical analysis and syntactic parsing, where individual words or tokens are identified and their relationships are established. This can be likened to the creation of a parse tree or an abstract syntax tree in natural language processing.

2. Feature Extraction and Embedding (Second Car - "Forming Layers"):

The layers formed in this stage can be interpreted as the creation of feature vectors or embeddings. Each layer potentially represents different levels of abstraction or different types of features extracted from the input. This could involve techniques such as:

- Word embeddings (e.g., Word2Vec, GloVe)

- Contextual embeddings (e.g., BERT, ELMo)

- N-gram features

- Part-of-speech tagging

- Named entity recognition

3. Dimensionality Reduction and Information Compression (Third Car - "Compressing Layers"):

This stage represents the process of condensing the extracted features into a more compact and informative representation. This could involve techniques such as:

- Principal Component Analysis (PCA)

- t-SNE (t-Distributed Stochastic Neighbor Embedding)

- Autoencoders

- Attention mechanisms in neural networks

4. Semantic Representation (Final Bubble - "Mental Model"):

The end result of this process is the formation of a semantic representation or a "mental model". In machine learning terms, this could be interpreted as:

- A learned latent space representation

- A knowledge graph

- A set of weighted concepts in a semantic network

- A fine-tuned language model capable of generating contextually relevant outputs

The overall process illustrated in the diagram aligns with the concept of representation learning in artificial intelligence, where raw input data is transformed into a format that can be effectively exploited in machine learning tasks.

The "Training Meaning" title suggests that this process is part of a learning algorithm, potentially involving iterative optimization to improve the quality of the final semantic representation. The "Train of Thought" metaphor implies a sequential, potentially recurrent process, where each stage builds upon the outputs of the previous stages.

This diagram could be describing the internal mechanics of various natural language processing models, particularly those involving deep learning architectures such as recurrent neural networks (RNNs), long short-term memory networks (LSTMs), or transformer models.