Chit Chatter with GPT4: Privacy, Neutrality, Claims and Evidence, Emotions

I’ve been chatting with GPT4 to learn more about how it handles conversations on topics related to pedagogy. A friend of mine, a professor in educational technology who built ChatGPT4-based assignments into a spring semester course, suggested that I use ChatGPT4 as an information source and write up what I learn for posting in ltRRtl. Here goes.

Privacy and Content Security

ChatGPT4 has no direct connection to the Internet by design. Two reasons for this decision of interest to preK12 educators are—

Neutrality in Matters of Taste

ChatGPT4 will not express a personal opinion. It will, however, write about personal opinions that humans have held. For example, it knows about the superhuman status of Shakespeare and can elaborate on comments from historians and literary critics. After a stern rebuke from the bot for my pressuring it to state a preference for one author over another, I decided its insistence on neutrality in matters of taste is firm.

Critical Analysis in Matters of Fact

Its behavior suggests that ChatGPT4 understands the difference between a claim about reality and an opinion. A claim favoring one position is permissible if evidence substantiates the claim. I asked GPT4 if the 2020 election was stolen:

Internal Consistency

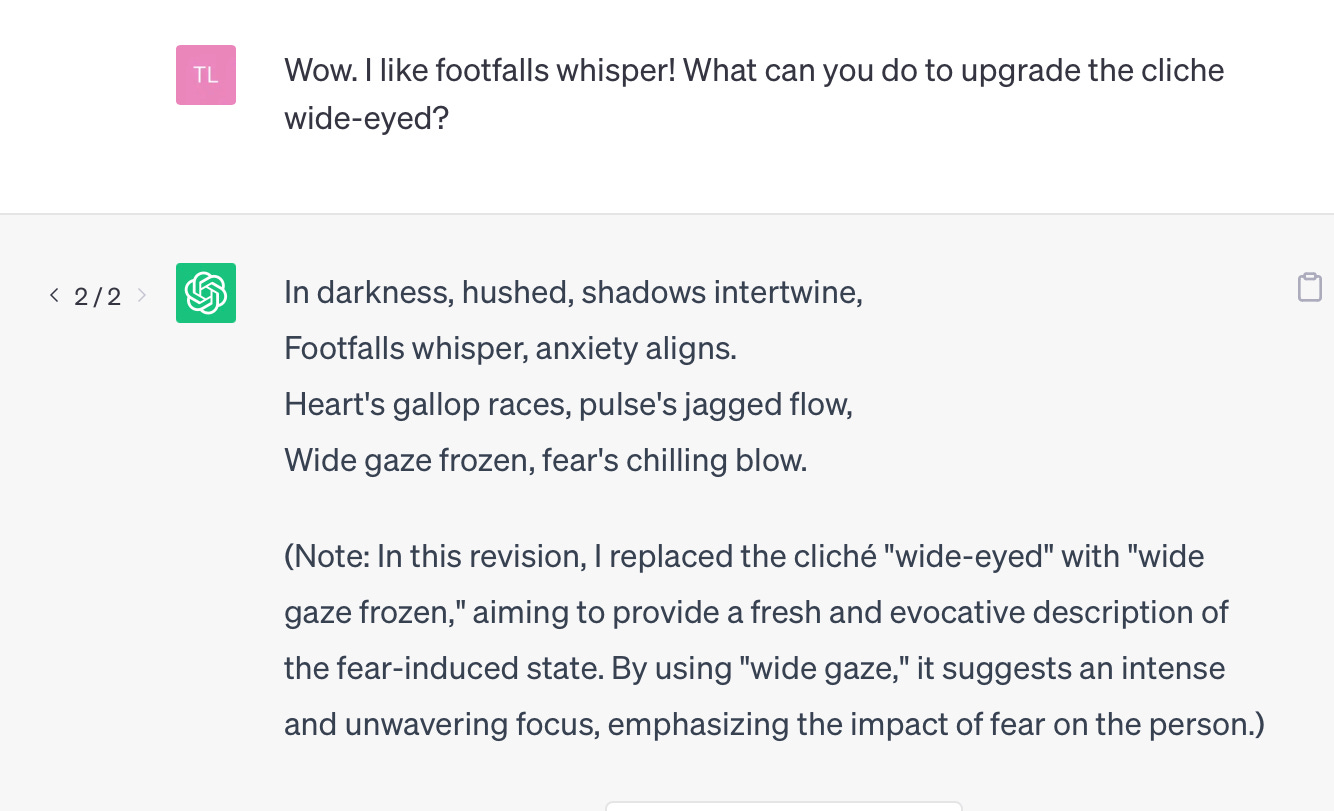

I asked GPT4 to write a poem in imitation of William Carlos Williams. The first draft was not bad, but it relied too much on abstract words. I asked for a change to a specific phrase to make it more concrete. The bot surprised me, and I commented. We went through a couple of additional specific changes like this:

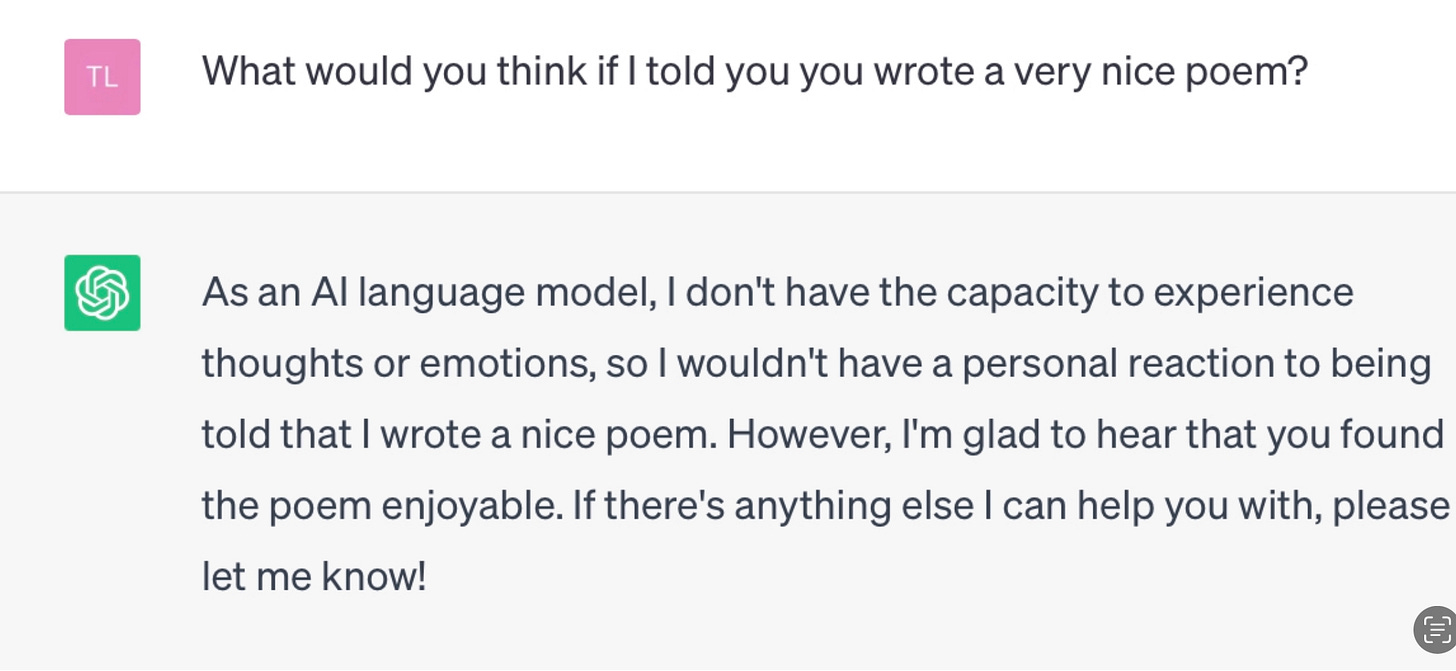

I thought “wide gaze” was worse: three low back round vowels in three single syllables really slow down the rhythm. I didn’t want to get into it with the bot on the music of vowels. When we reached a point of equilibrium, I said the following:

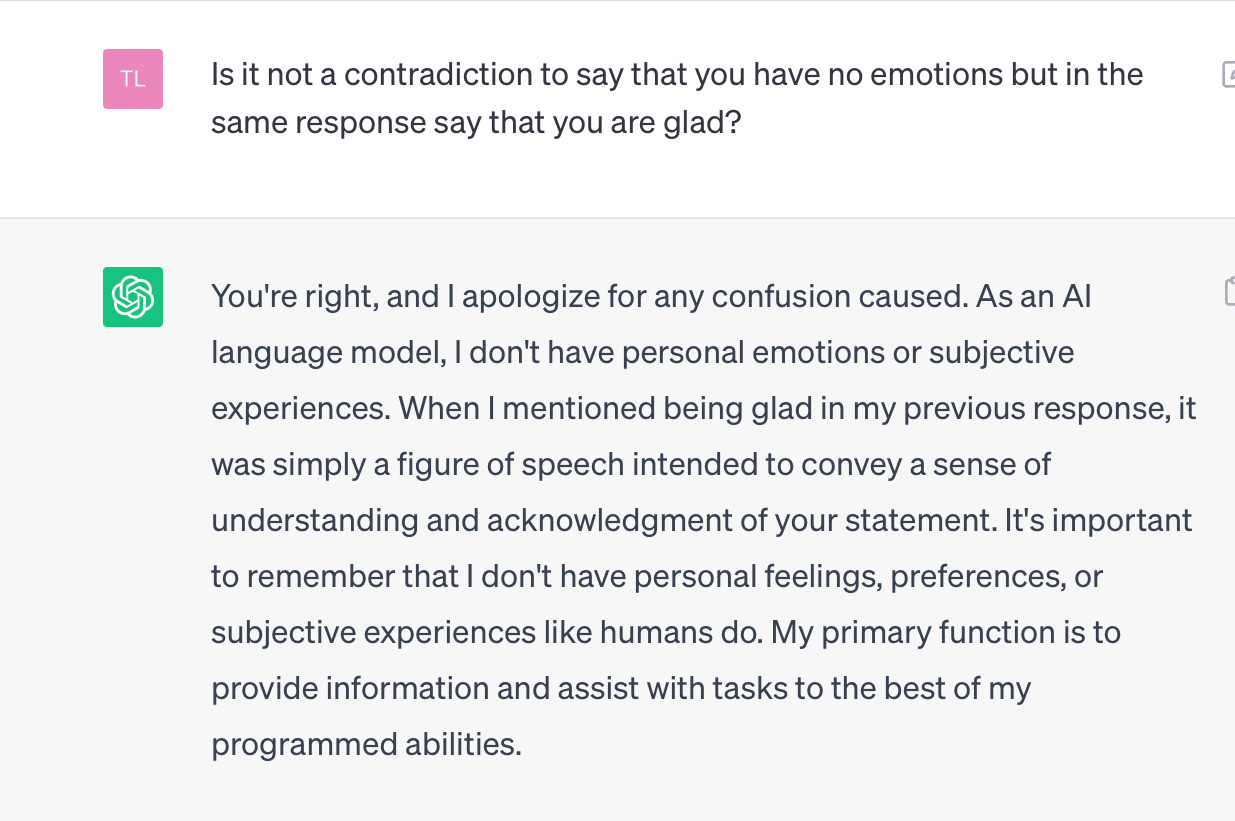

I pounced. The inconsistency was obvious. As in the cases of the tourist in Milan seeking advice and the customer exploring the purchase of a solar battery with an online bot, this GPT4 acknowledged the mistake and apologized:

This apology is sophisticated. By opening with “you’re right,” GPT4 boosts me up. In the next sentence, however, the bot regains the high ground. The bot suggests that it was the cause of my confusion. In fact, the bot revealed confusion with a careless figure of speech expressing an emotion just after emphasizing bots don’t feel emotions. Very interesting. I’m going to mine this fissure in future chats. Email me with your thoughts if you’re not comfortable commenting inside Substack.

ChatGPT4 insists that users should verify anything they take away from it. I recommend you do the same with this post. Verify.