The original APGAR (1952) stands as a masterclass in authentic assessment, a rubric used by nurses and midwives wherever babies are delivered.

Doctors use APGAR scores to make real-time, consequential decisions about interventions for newborn infants to smooth the transition from the womb to the world.

The APGAR Narrative Rubric for Authentic Assessment offered here for discussion purposes uses the APGAR model as an inspiration for distinguishing levels of authenticity in classroom assessment practices.

Rubric Framework

The APGAR three-tiered framework for authentic assessment examines how closely educational assessments mirror the exemplary strengths of the APGAR approach to infant assessment, strengths which make the assessment authentic:

systematic observation

clear criteria

expert judgment

timely application

actionable results

While Level 1 on the rubric denotes assessment practices that, like pre-APGAR medical observations, rely primarily on unstandardized impressions, Level 3 depicts assessment approaches that fully embrace the principles that made APGAR revolutionary:

1) structured observation protocols,

2) clear scoring criteria, and

3) direct links to intervention.

This Narrative Rubric helps educators evaluate and enhance the authenticity of their assessment practices while maintaining the rigor and reliability that make APGAR-like systems so valuable at local scale in professional practice.

Core Components of Authentic Assessment

Systematic Observation

Just as the APGAR score requires nurses to methodically assess five key indicators of newborn health, authentic classroom assessment consists of structured protocols for observing students at work in order to learn to teach them.

Learners become informants, observing themselves and keeping records to better understand their growth and to help you better understand what they are doing as they work.

Instead of relying on random or opportunistic observation, assessment protocols follow a deliberate sequence of activities, giving all learners space in the community of learners and access to feedback.

This systematic approach ensures that no critical indicators are missed and that observations are comprehensive rather than fragmentary. Grounded in a mentor model of instruction, the benefits for the learner motivate exertion of effort and engagement in activity.

Clear Criteria

Authentic assessment requires clear criteria that describe what an expert assessor would notice upon observing a performance. A common misconception confuses descriptive criteria with prescription. A criterion is added not because someone in authority would like to see more of it, but because experts find it salient in authentic learning and worth noting.

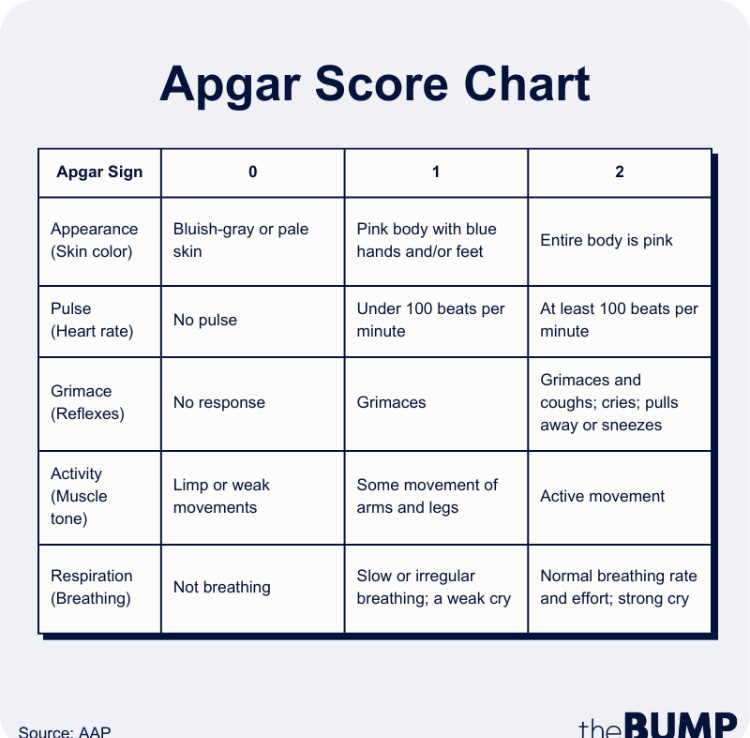

Authentic assessment frameworks coordinate attention on a shared set of features (skin color, pulse, breathing, etc.) and provide a shared vocabulary for communication. Rubric criteria for classroom use establish distinctions between levels of performance that expert teachers see and grasp in their own students’ work. Criteria are not speculative, aspirational, vague, or imagined: a pink trunk with blue extremities, a pulse under 100. These are real, visible, and available to anyone with eyes to see.

These criteria must be specific enough that different observers would reach similar conclusions under similar circumstances, yet flexible enough to accommodate the complexity of student learning. The power lies in creating a shared language for discussing student learning anchored in concrete teaching experiences, much as APGAR created a universal terminology for newborn health.
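One rough way to check whether criteria are "specific enough" is to have two raters score the same pieces of work and see how often they land on the same level. The sketch below is only an illustration: the function name `agreement`, the `tolerance` parameter, and the sample scores are all hypothetical, and simple percent agreement is just one of several possible measures.

```python
def agreement(rater_a, rater_b, tolerance=0):
    """Share of items on which two raters' levels differ by at most `tolerance`."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must score the same items")
    if not rater_a:
        raise ValueError("need at least one scored item")
    matches = sum(abs(a - b) <= tolerance for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Hypothetical rubric levels (1-3) that two teachers gave the same six pieces of work.
teacher_a = [1, 2, 3, 2, 3, 1]
teacher_b = [1, 2, 2, 2, 3, 3]

exact = agreement(teacher_a, teacher_b)                  # identical levels only
adjacent = agreement(teacher_a, teacher_b, tolerance=1)  # within one level

print(f"exact agreement: {exact:.2f}, adjacent agreement: {adjacent:.2f}")
```

Low exact agreement with high adjacent agreement would suggest the levels are roughly right but their boundaries need sharper wording. Frequent two-level gaps would suggest the raters are reading the criteria differently.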

Expert Judgment

The role of expert judgment in authentic assessment is twofold. Criteria for scoring student work processes and products should be written collaboratively by expert judges. Then raters have anchors to match with their own expertise.

Anyone could use the APGAR framework and score an infant. But anyone’s score may or may not have value in reliably communicating the type of intervention a baby needs. Linking observations, judgments, and actions reliably and effectively is the core of assessment expertise.

Timely Application

The timing of authentic assessments should derive organically from learning sequences and provide preplanned opportunities to identify and affirm upcoming lesson-plan activities vis-à-vis student needs while there is still time to intervene. This temporal dimension distinguishes authentic assessment from end-point evaluation, emphasizing that its main role is to support learning and only secondarily to measure it.

Actionable Results

The APGAR score's greatest strength lies in its immediate connection to medical intervention—a score below 7 triggers specific “what next’s.” Similarly, authentic classroom assessment ought to generate results that link to micro and macro instructional decisions. This direct link between assessment and action ensures that the evaluation serves a practical purpose in fostering student growth rather than simply documenting a score and moving on.
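The link from score to action can be sketched in a few lines. The example assumes the standard APGAR structure of five indicators, each scored 0, 1, or 2, and uses the threshold of 7 mentioned above. The class name, function name, and the two action labels are hypothetical placeholders, not clinical guidance.

```python
from dataclasses import dataclass, astuple

ACTION_THRESHOLD = 7  # from the text: a total below 7 triggers a "what next"


@dataclass(frozen=True)
class ApgarObservation:
    """One structured observation: five indicators, each scored 0, 1, or 2."""
    appearance: int
    pulse: int
    grimace: int
    activity: int
    respiration: int

    def total(self) -> int:
        scores = astuple(self)
        if any(score not in (0, 1, 2) for score in scores):
            raise ValueError("each indicator must be scored 0, 1, or 2")
        return sum(scores)


def next_step(observation: ApgarObservation) -> str:
    """Map a total score to an action label (labels are illustrative only)."""
    if observation.total() < ACTION_THRESHOLD:
        return "follow up now"
    return "continue routine observation"


# Pink trunk with blue extremities (1) and pulse under 100 (1), otherwise strong.
first_check = ApgarObservation(appearance=1, pulse=1, grimace=2, activity=2, respiration=2)
print(first_check.total(), next_step(first_check))
```

A classroom rubric could follow the same shape: a fixed set of observable indicators, a small closed scale for each, and an agreed rule for what a given total means for the next instructional move.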

SCORE POINTS

Assessment practices in education tend to evolve through three distinct levels, representing a deepening of professional vision and systematic observation.

I

At the most basic level, we find what might be called the pre-APGAR approach—assessment driven primarily by individual instinct and unexamined habits.

Here, teachers rely heavily on personal gut feelings, simple point systems, and idiosyncratic terminology. Terms like "lazy" or "didn't try" populate feedback, reflecting a lack of standardized professional language.

This approach, while natural, often leads to inconsistency across classrooms and potential conflicts with learners who struggle to understand grading decisions. Without systematic observation structures, critical indicators of learning may be missed, and assessment timing often feels arbitrary rather than strategic.

II

As teachers begin moving toward more systematic practice, they enter what we might call the potentially APGAR stage. Here, we see the emergence of more structured observation, often initially motivated by external demands or special cases requiring documentation.

Teachers at this level start incorporating multiple indicators of student engagement and learning: tracking attendance patterns, maintaining regular contact with learners and families, and developing some standardized terminology for describing student behaviors.

While still evolving, this stage shows promising signs of professional growth: departments begin collaborating on shared academic missions, teachers start using basic quantification tools like checklists, and there's growing recognition of the role of expert clinical judgment in educational decisions.

The academic culture provides some flexibility in curriculum implementation while maintaining reasonable autonomy from standardized testing pressures. Teachers are developing an interest in learning more about responsive pedagogy as opposed to direct, explicit teaching.

III

The fully realized APGAR approach represents the highest evolution of professional vision in educational assessment. Here, all teachers systematically evaluate each learner using field-tested, collectively agreed-upon methods, genres, and rubrics that examine crucial dimensions of learning: emotional well-being, excitement for learning, comfort with challenge, community engagement, and knowledge-building mindset.

Like its medical counterpart, this approach features precise timing, clear numerical scoring, and locally discussed professional terminology that facilitates rapid communication between colleagues. Assessment becomes truly actionable, immediately identifying intervention needs while documenting both current status and responses to educational transitions.

What makes this highest level truly transformative is its integration of systematic observation with expert professional judgment leading to more effective pedagogical actions.

Teachers maintain constant attentiveness to their learners while conducting these assessments. The approach creates a shared professional vision across classrooms and schools, driving specific interventions when assessment indicates need. Most importantly, it requires and develops true expertise, i.e., highly skilled observers with reflective judgment and deep experience in student assessment.

This evolution from intuitive to systematic authentic assessment doesn't diminish teacher autonomy. Rather, it provides greater autonomy through confidence that judgment leads to optimal choices of action.

Collaborative and collegial authentic assessment development creates a space where expert insight can be amplified across classrooms while maintaining the unique humanity and immediacy of the teacher-student relationship.

Thanks Terry for making this connection. As the father of a "blue baby", I am grateful for this simple technology that has saved many lives. Likewise, a well-designed and collaboratively applied rubric can lead to feedback that can support student growth and improve teachers' practice.

Your post reminded me of an article in The New Yorker by Dr. Atul Gawande, "The Score": https://www.newyorker.com/magazine/2006/10/09/the-score

He notes that for all of the benefits that the APGAR score has brought to this field of medicine, there have been some negative outcomes. For example, doctors are more likely to go to a C-section out of fear of natural birth challenges, even though a C-section may increase the risk to the mother. Gawande refers to this as "the tyranny of the score".

Do you see any challenges or negative repercussions from the use of rubrics for evaluating student work?

This is great, Terry!!